Data Pipeline Management and Security Data Fabrics

In the recent past, DataBahn has been featured in three different narratives focused on security data:

- Cybersecurity experts like Cole Grolmus (Strategy of Security) discussing how DataBahn’s “Security Data Fabric” solution is unbundling security data collection from SIEMs (Post 1, Post 2)

- VCs such as Eric Fett of NGP Capital talking about how DataBahn’s AI-native approach to cybersecurity is revolutionizing enterprise SOCs’ attempts to combat alert fatigue and escalating SIEM costs (Blog)

- Most recently, Allie Mellen, a Principal Analyst at Forrester, shouted DataBahn out as a “Data Pipeline Management” product focusing on security use cases. (LinkedIn Post, Blog)

Being mentioned by these experts is welcome validation. It is also recognition that we are solving a relevant problem for businesses. That these mentions come from a cybersecurity expert, a VC, and an analyst reflects the different perspectives from which our mission can be considered.

What are these experts saying?

Allie’s wonderful article (“If You’re Not Using Data Pipeline Management for Security and IT, You Need To”) expertly articulates how SIEM spending is increasing, and that SIEM vendors haven’t created effective tools for log size reduction or routing since it “… directly opposes their own interests: getting you to ingest more data into their platform and, therefore, spend more money with them.”

This aligns with what Cole alluded to when he stated reasons why “Security Data Fabrics” shouldn’t be SIEMs, pointing to this same conflict of interest. He added that these misaligned incentives spill over into interoperability, where proprietary data formats and preferred destinations promote vendor lock-in, a problem he had previously said Security Data Fabrics were designed to overcome.

Eric’s blog focused on AI-native cybersecurity disrupters, and named DataBahn as one of the leading companies whose architecture was designed to leverage and support AI features, enabling seamless integration into their own AI assets to “… overcome alert fatigue, optimize ingestion costs, and allocate resources to the most critical security risks.”

What is our point of view?

The reflections of these experts resonate with our conception of the problem we are trying to solve: SOCs and Data Engineering teams are overwhelmed by the laborious task of data management and by the prohibitive cost, in time and effort, of overcoming it.

- SIEM ingest costs are too high. ~70% of the data being sent to SIEMs is not security-relevant. Logs have extra fields you don’t always need, and indexed data becomes 3-5x the original size. SIEM pricing depends on the volume of data ingested and stored – which strains budgets and reduces the value that SOCs get from their SIEMs.

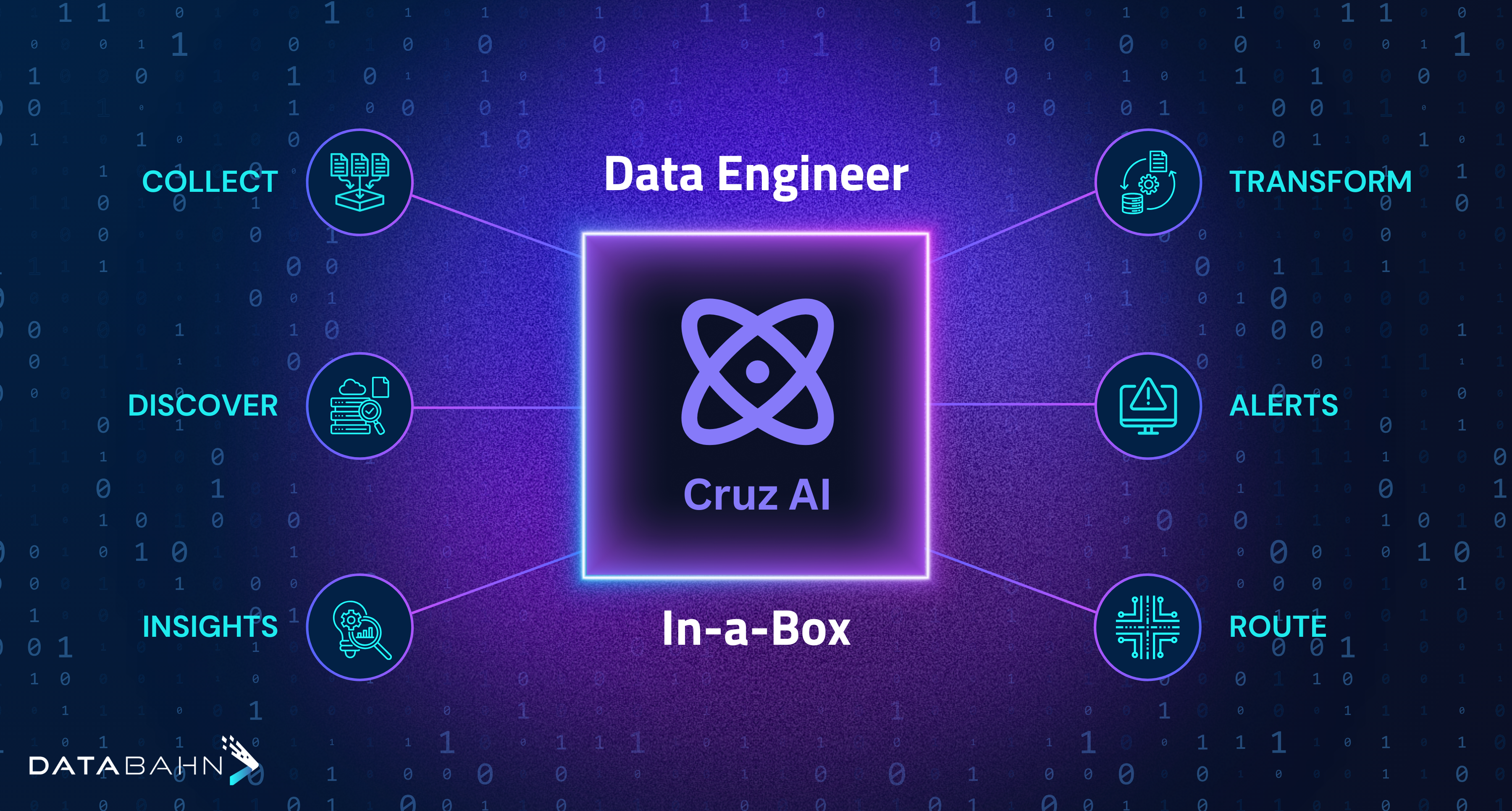

We deliver a 35%+ reduction in SIEM costs within 2-4 weeks by reducing log sizes – and our AI-enabled platform supports ongoing optimization to keep reducing them.
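
To make the idea of log size reduction concrete, here is a minimal Python sketch. It is not DataBahn’s implementation: the rule names, event types, and field names are all hypothetical, and it simply assumes that reduction means dropping events that are not security-relevant and stripping fields the SIEM does not need.

```python
import json

# Hypothetical rules (illustrative assumptions, not a real rule library):
# event types treated as not security-relevant, and fields safe to drop.
NOISY_EVENT_TYPES = {"heartbeat", "debug"}
DROPPABLE_FIELDS = {"user_agent_raw", "trace_id"}


def reduce_events(events):
    """Filter out noisy events, then strip droppable fields from the rest."""
    kept = []
    for event in events:
        if event.get("type") in NOISY_EVENT_TYPES:
            continue
        kept.append({k: v for k, v in event.items() if k not in DROPPABLE_FIELDS})
    return kept


def size_in_bytes(events):
    """Approximate ingest volume as the size of the JSON-serialized events."""
    return sum(len(json.dumps(e).encode("utf-8")) for e in events)


sample = [
    {"type": "heartbeat", "host": "web-1", "trace_id": "a1"},
    {"type": "login_failed", "host": "web-1", "user": "alice",
     "user_agent_raw": "Mozilla/5.0 ...", "trace_id": "a2"},
    {"type": "debug", "host": "web-2", "trace_id": "a3"},
]

reduced = reduce_events(sample)
before, after = size_in_bytes(sample), size_in_bytes(reduced)
print(f"{before} bytes -> {after} bytes ({1 - after / before:.0%} smaller)")
```

Because SIEM pricing follows ingested volume, any bytes removed before the SIEM sees them translate directly into lower cost; the percentage printed here applies only to this toy sample.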

- Using the SIEM as the point of data ingestion is also a problem. SIEMs are not very good at data ingestion. While some SIEM vendors have associated cloud environments (Sentinel, SecOps) with native ingestion tools, adding new sources – especially custom apps or sources with unstructured data – requires extensive data engineering effort and 4-8 weeks of integration. Additionally, managing these data pipelines is challenging, as each pipeline is a single point of failure. Back pressure and spikes in data volumes can cause data loss.

DataBahn ensures lossless data ingestion via a mesh architecture that provides a secondary channel to ingest data in case of any blockage. It also tracks and identifies sudden changes in volume, helping to identify issues faster.
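
The two ideas in this paragraph, a secondary channel and volume-change detection, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of DataBahn’s mesh: `ChannelDown`, `send_with_failover`, and `is_volume_anomaly` are hypothetical names, and the anomaly check is a plain standard-deviation threshold chosen for simplicity.

```python
from statistics import mean, pstdev


class ChannelDown(Exception):
    """Raised by a channel that cannot currently accept events."""


def send_with_failover(event, primary, secondary):
    """Try the primary channel; fall back to the secondary if it is blocked."""
    try:
        primary(event)
        return "primary"
    except ChannelDown:
        secondary(event)
        return "secondary"


def is_volume_anomaly(history, current, threshold=3.0):
    """Flag `current` if it deviates from the mean of `history` by more
    than `threshold` standard deviations."""
    if len(history) < 2:
        return False
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > threshold


delivered = []


def blocked_primary(event):
    # Simulates a back-pressured pipeline that refuses new events.
    raise ChannelDown("primary pipeline is back-pressured")


def healthy_secondary(event):
    delivered.append(event)


route = send_with_failover({"id": 1}, blocked_primary, healthy_secondary)
print(route, delivered)

events_per_minute = [1000, 1040, 980, 1010, 995]
print(is_volume_anomaly(events_per_minute, 1020))  # within normal variation
print(is_volume_anomaly(events_per_minute, 5000))  # sudden spike
```

A production system would also need buffering and retry behavior for the case where both channels are unavailable, which this sketch leaves out.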

- Data Formats and Schemas are a challenge. SIEMs, UEBAs, Observability Tools, and different data storage destinations come with their own proprietary formats and schemas, which add another manual task of data transformation for data engineering teams. Proprietary formats and compliance requirements also create vendor lock-in situations, which add to your data team’s cost and effort.

We automate data transformation, ensuring seamless and effortless data standardization, data enrichment, and data normalization before forking the data to the destination of your choice.
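
As a rough illustration of normalizing data and then forking it to several destinations, consider the Python sketch below. The source names, field mappings, and common schema are hypothetical and chosen only for the example; they do not represent DataBahn’s connectors or any specific vendor schema.

```python
# Hypothetical per-source mappings from native field names to one common schema.
FIELD_MAPS = {
    "firewall": {"src": "source_ip", "dst": "destination_ip", "act": "action"},
    "webapp": {"client_ip": "source_ip", "server_ip": "destination_ip",
               "result": "action"},
}


def normalize(source, raw):
    """Rename source-specific fields to the common schema and tag the origin."""
    mapping = FIELD_MAPS[source]
    event = {common: raw[native]
             for native, common in mapping.items() if native in raw}
    event["source_type"] = source
    return event


def fork(event, destinations):
    """Deliver the same normalized event to every destination."""
    for deliver in destinations:
        deliver(dict(event))  # copy so destinations cannot affect each other


# Plain lists stand in for a SIEM and a data lake in this sketch.
siem, data_lake = [], []
raw_events = [
    ("firewall", {"src": "10.0.0.5", "dst": "10.0.0.9", "act": "deny"}),
    ("webapp", {"client_ip": "10.0.0.7", "server_ip": "10.0.0.9",
                "result": "allow"}),
]
for source, raw in raw_events:
    fork(normalize(source, raw), [siem.append, data_lake.append])

print(siem)
```

Normalizing once, before the fork, means each destination receives the same field names regardless of which source produced the event, so switching or adding a destination does not require reworking every source.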

Our solution is designed for specific security use cases, including a library of 400+ connectors and integrations and 900+ volume reduction rules to reduce SIEM log volumes, as well as support for all the major formats and schemas – which puts it ahead of generic DPM tools, something which Allie describes in her piece.

Cole has been at the forefront of conversations around Security Data Fabrics, and has called out that DataBahn has built the most complete platform/product in the space, with capabilities across integration & connectivity, data handling, observability & governance, reliability & performance, and AI/ML support.

Conclusion

We are grateful to be mentioned in these vital conversations about security data management and its future, and we appreciate the time and effort these experts are spending to drive them. We hope that this increases awareness of Data Pipeline Management, Security Data Fabrics, and AI-native data management tools – a Venn diagram we are pleased to be at the intersection of – and we look forward to continuing our journey in solving the problems that these experts have identified.