Connecting Amazon Security Lake to value (and data)

Seamlessly collect on-prem and data from third-party sources, convert logs to OCSF, and format it for Amazon Security Lake storage in Parquet.

Get started with Amazon Security Lake and DataBahn

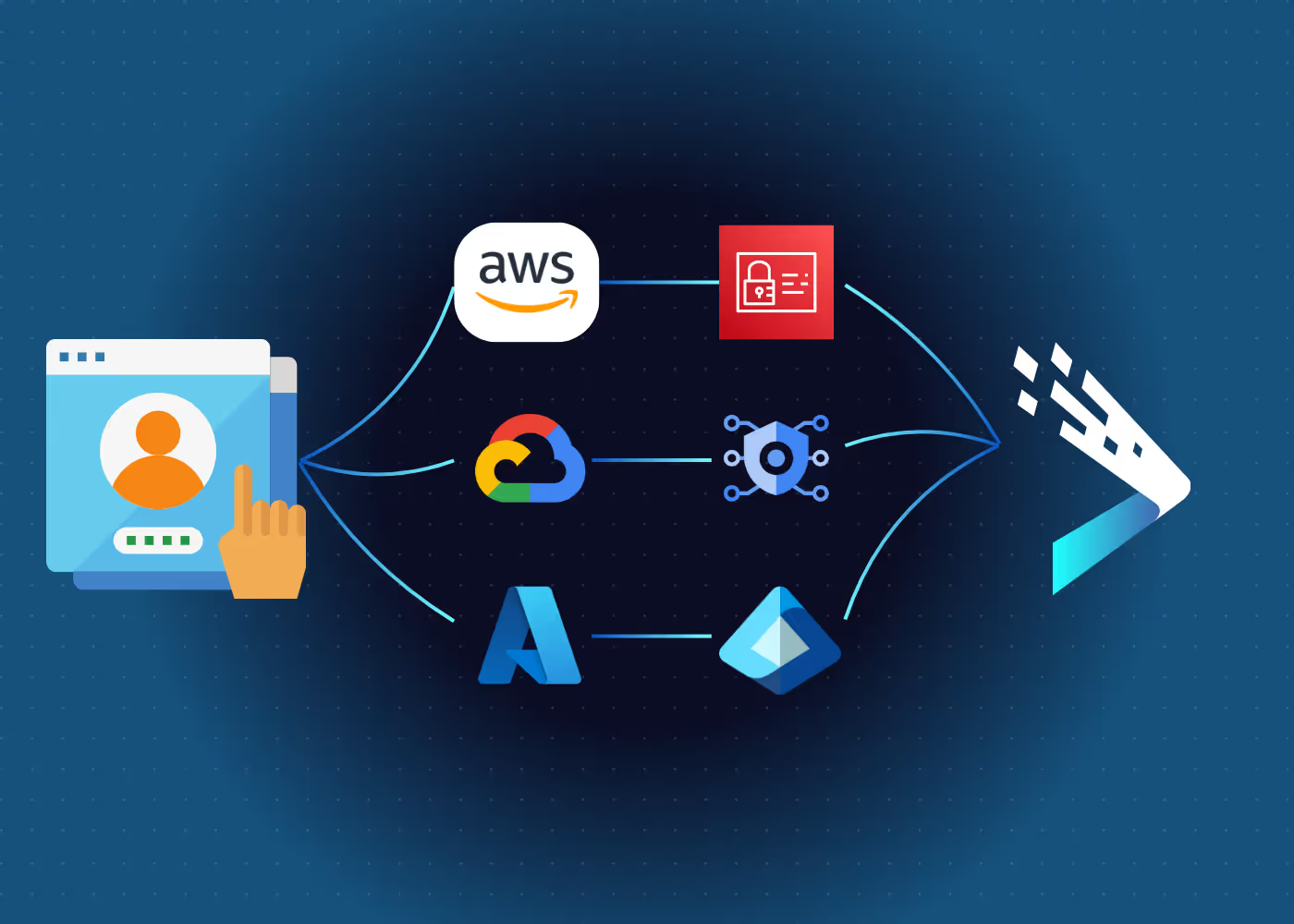

Amazon Security Lake helps organizations store and centralize their telemetry, but normalizing, amalgamating, and routing that data takes work. DataBahn automates the data engineering: unifying data into OCSF and storing it in different S3 buckets, enabling replay access and search, and optimizing data volume for in-transit data.

Prebuilt connectors to ingest data from non-AWS sources

Lower log volumes and Amazon S3 storage costs

Reduction in manual effort for parsing and transformation

Build or expand your Amazon Security Lake

Your starting point for all things DataBahn

Have Questions?

Here's what we hear often

What is a Data Fabric / Data Pipeline Management Platform?

A Data Fabric is an architecture that enables systems to connect with different sources and destinations for data, simplifying its movement and management using intelligent and automated systems, a unified view, and the potential for real-time access and analytics.

Data Fabrics manage security, application, observability, and IoT/OT data to automate and optimize data operations and engineering work for security, IT, and data teams. Data Fabrics are also an umbrella term for Data Pipeline Management (DPM) platforms, which are tools and systems that enable easier collection and ingestion of data from various sources.

DPM platforms are evaluated by how many sources and destinations they can manage and to what degree they can optimize the volume of data being orchestrated through them.

What makes DataBahn different from traditional DPM tools and platforms?

DataBahn goes beyond being a data pipeline by delivering a full-stack data management and AI transformation solution. We are the leading DPM solution (maximum number of integrations, most effective volume reductions, etc.) and also deliver AI-powered improvements that make us best-in-class.

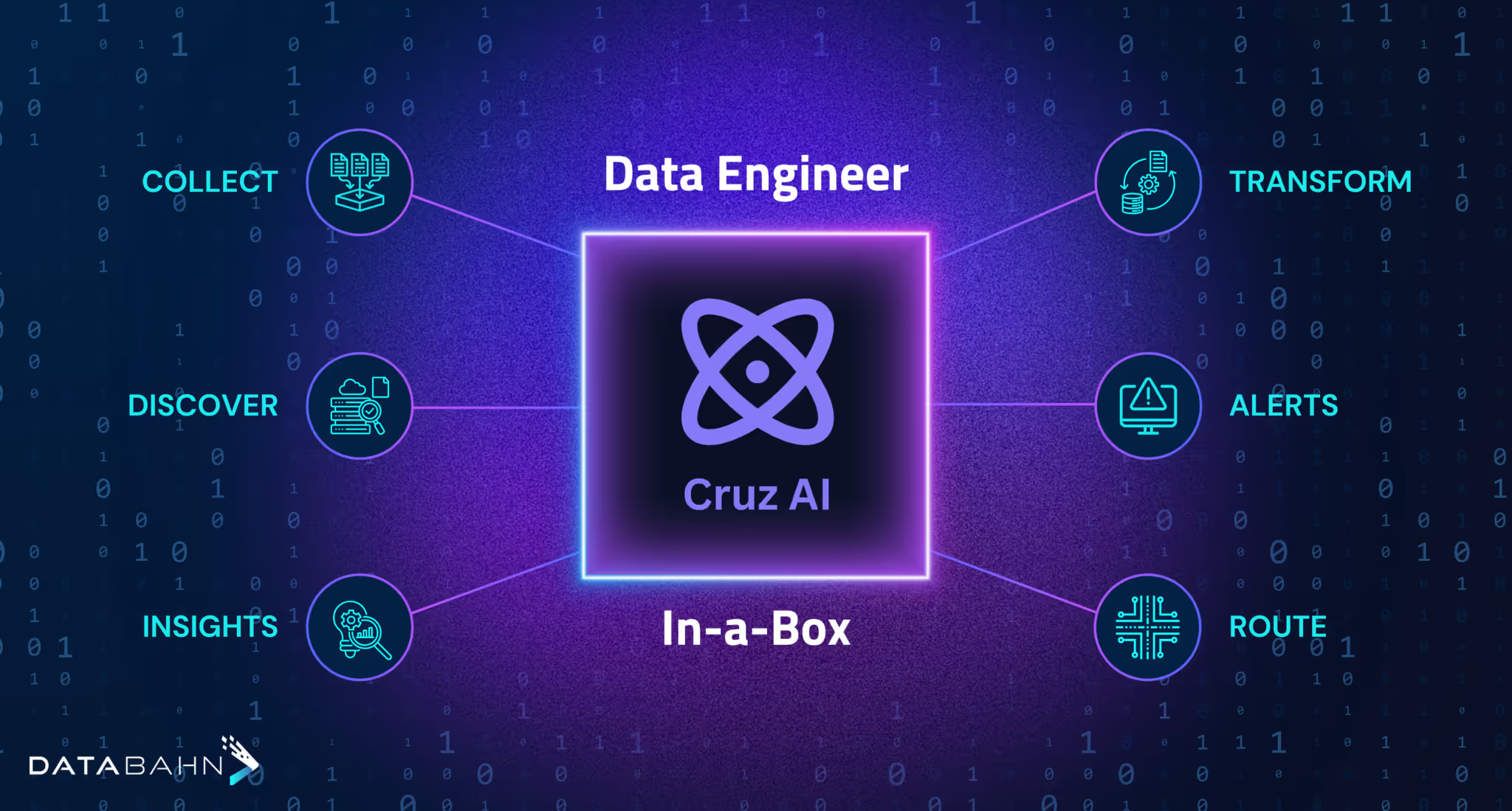

Our Agentic AI automates data engineering tasks by autonomously detecting log sources, creating pipelines, and parsing structured and unstructured data from standard and custom applications. It also tracks, monitors, and manages data flow, using backups and flagging any errors to ensure the data flows through.

Our system collates, correlates, and tags the data to enable simplified access, and creates a database that enables custom AI agent or AI application development.

Will the volume control and reduction of my tools reduce my tools' effectiveness? Is the volume reduction something I can control?

Our volume reduction functionality is completely under the control of our users. There are two modules - a library of volume reduction rules that will reduce SIEM and observability data.

Some of these rules are absolutely guaranteed not to impact their functioning (data deduplication, for example) while others are based on our collective experience of being less relevant or useful.

Users can absolutely control which data goes where, and opt to keep sending those volumes to their SIEM or Observability solution.

Ready to accelerate towards Data Utopia?

Experience the speed, simplicity, and power of our AI-powered data fabric platform.

with a personalized test drive.

%20(3).avif)

.avif)

.avif)