The DataBahn Blog

The latest articles, news, blogs and learnings from DataBahn

Popular Posts

McKinsey says ML pipelines are the future of banking

In their article about how banks can extract value from a new generation of AI technology, notable strategy and management consulting firm McKinsey identified AI-enabled data pipelines as an essential part of the ‘Core Technology and Data Layer’. They found this infrastructure to be necessary for AI transformation, as an important intermediary step in the evolution banks and financial institutions will have to make for them to see tangible results from their investments in AI.

The technology stack for the AI-powered banking of the future relies greatly on an increased focus on managing enterprise data better. McKinsey’s Financial Services Practice forecasts that with these tools, banks will have the capacity to harness AI and “… become more intelligent, efficient, and better able to achieve stronger financial performance.”

What McKinsey says

The promise of AI in banking

The authors point to increased adoption of AI across industries and organizations, but the depth of the adoption remains low and experimental. They express their vision of an AI-first bank, which -

- Reimagines the customer experience through personalization and streamlined, frictionless use across devices, for bank-owned platforms and partner ecosystems

- Leverages AI for decision-making, by building the architecture to generate real-time insights and translating them into output which addresses precise customer needs. (They could be talking about Reef)

- Modernizes core technology with automation and streamlined architecture to enable continuous, secure data exchange (and now, Cruz)

They recommend that banks and financial service enterprises set a bold vision for AI-powered transformation, and root the transformation in business value.

AI stack powered by multiagent systems

The true potential of AI will require banks of the future to tread beyond just AI models, the authors claim. With embedding AI into four capability layers as the goal, they identify ‘data and core tech’ as one of those four critical components. They have augmented an earlier AI capability stack, specifically adding data preprocessing, vector databases, and data post-processing to create an ‘enterprise data’ part of the ‘core technology and data layer’. They indicate that this layer would build a data-driven foundation for multiple AI agents to deliver customer engagement and enable AI-powered decision-making across various facets of a bank’s functioning.

Our perspective

Data quality is the single greatest predictor of LLM effectiveness today, and our current generation of AI tools are fundamentally wired to convert large volumes of data into patterns, insights, and intelligence. We believe the true value of enterprise AI lies in depth, where Agentic AI modules can speak and interact with each other while automating repetitive tasks and completing specific and niche workstreams and workflows. This is only possible when the AI modules have access to purposeful, meaningful, and contextual data to rely on.

We are already working with multiple banks and financial services institutions to enable data processing (pre and post), and our Cruz and Reef products are deployed in many financial institutions to become the backbone of their transformation into AI-first organizations.

Are you curious to see how you can come closer to building the data infrastructure of the future? Set up a call with our experts to see what’s possible when data is managed with intelligence.


DataBahn.ai raises $17Mn in Series A to reimagine security data management

Two years ago, our DataBahn journey began with a simple yet urgent realization: security data management is fundamentally flawed. Enterprises are overwhelmed by security and telemetry data, struggling to collect, store, and process it, while finding it harder and harder to gain timely insights from it. As leaders and practitioners in cybersecurity, data engineering, and data infrastructure, we saw this pattern everywhere: spiraling SIEM costs, tool sprawl, noisy data, tech debt, brittle pipelines, and AI initiatives blocked by legacy systems and architectures.

We founded DataBahn to break this cycle. Our platform is specifically designed to help enterprises regain control: disconnecting data pipelines from outdated tools, applying AI to automate data engineering, and constructing systems that empower security, data, and IT teams. We believe data infrastructure should be dynamic, resilient, and scalable, and we are creating systems that leverage these core principles to enhance efficiency, insight, and reliability.

Today, we’re announcing a significant milestone in this journey: a $17M Series A funding round led by Forgepoint Capital, with participation from S3 Ventures and returning investor GTM Capital. Since coming out of stealth, our trajectory has been remarkable – we’ve secured a Fortune 10 customer and have already helped several Fortune 500 and Global 200 companies cut over 50% of their telemetry processing costs and automate most of their data engineering workloads. We're excited by this opportunity to partner with these incredible customers and investors to reimagine how telemetry data is managed.

Tackling an industry problem

As operators, consultants, and builders, we worked with and interacted with CISOs across continents who complained about how they had gone from managing gigabytes of data every month to being drowned by terabytes of data daily, while using the same pipelines as before. Layers and levels of complexity were added by proprietary formats, growing disparity in sources and devices, and an evolving threat landscape. With the advent of Generative AI, CISOs and CIOs found themselves facing an incredible opportunity wrapped in an existential threat, and without the right tools to prepare for it.

DataBahn is setting a new benchmark for how modern enterprises and their CISOs and CIOs can manage and operationalize their telemetry across security, observability, IoT/OT systems, and AI ecosystems. Built on a revolutionary AI-driven architecture, DataBahn parses, enriches, and suppresses noise at scale, all while minimizing egress costs. This is the approach our current customers are excited about, because it addresses key pain points they have been unable to solve with other solutions.

Our two new Agentic AI products are solving problems for enterprise data engineering and analytics teams. Cruz automates complex data engineering tasks from log discovery, pipeline creation, tracking and maintaining telemetry health, to providing insights on data quality. Reef surfaces context-aware and enriched insights from streaming telemetry data, turning hours of complex querying across silos into seconds of natural-language queries.

The Right People

We’re incredibly grateful to our early customers; their trust, feedback, and high expectations have shaped who we are. Their belief drives us every day to deliver meaningful outcomes. We’re not just solving problems with them, we’re building long-term partnerships to help enterprise security and IT teams take control of their data, and design systems that are flexible, resilient, and built to last. There’s more to do, and we’re excited to keep building together.

We’re also deeply thankful for the guidance and belief of our advisors, and now our investors. Their support has not only helped us get here but also sharpened our understanding of the opportunity ahead. Ernie, Aaron, and Saqib’s support has made this moment more meaningful than the funding; it’s the shared conviction that the way enterprises manage and use data must fundamentally change. Their backing gives us the momentum to move faster, and the guidance to keep building towards that mission.

Above all, we want to thank our team. Your passion, resilience, and belief in what we’re building together are what got us here. Every challenge you’ve tackled, every idea you’ve contributed, every late night and early morning has laid the foundation for what we have done so far and for what comes next. We’re excited about this next chapter and are grateful to have been on this journey with all of you.

The Next Chapter

The complexity of enterprise data management is growing exponentially. But we believe that with the right foundation, enterprises can turn that complexity into clarity, efficiency, and competitive advantage.

If you’re facing challenges with your security or observability data, and you’re ready to make your data work smarter for AI, we’d love to show you what DataBahn can do. Request a demo and see how we can help.

Onwards and upwards!

Nanda and Nithya

Cofounders, DataBahn


The Cybersecurity Alert Fatigue Epidemic

In September 2022, cybercriminals accessed, encrypted, and stole a substantial amount of data from Suffolk County’s IT systems, which included personally identifiable information (PII) of county residents, employees, and retirees. Although Suffolk County did not pay the ransom demand of $2.5 million, it ultimately spent $25 million to address and remediate the impact of the attack.

Members of the county’s IT team reported receiving hundreds of alerts every day in the weeks leading up to the attack. Several months earlier, frustrated by the excessive number of unnecessary alerts, the team redirected the notifications from their tools to a Slack channel. Although the frequency and severity of the alerts increased leading up to the September breach, the constant stream of alerts wore the small team down, leaving them too exhausted to respond and distinguish false positives from relevant alerts. This situation created an opportunity for malicious actors to successfully circumvent security systems.

The alert fatigue problem

Today, cybersecurity teams are continually bombarded by alerts from security tools throughout the data lifecycle. Firewalls, XDRs/EDRs, and SIEMs are among the common tools that trigger these alerts. In 2020, Forrester reported that SOC teams received 11,000 alerts daily, and 55% of cloud security professionals admitted to missing critical alerts. Organizations cannot afford to ignore a single alert, yet alert fatigue, driven by an overwhelming number of unnecessary alerts, causes SOCs to leave up to 30% of security alerts uninvestigated or overlooked entirely.

While this creates a clear cybersecurity and business continuity problem, it also presents a pressing human issue. Alert fatigue leads to cognitive overload, emotional exhaustion, and disengagement, resulting in stress, mental health concerns, and attrition. More than half of cybersecurity professionals cite their workload as the primary source of stress, two-thirds reported experiencing burnout, and over 60% of cybersecurity professionals surveyed stated it contributed to staff turnover and talent loss.

Alert fatigue poses operational challenges, represents a critical security risk, and truly becomes an adversary of the most vital resource that enterprises rely on for their security — SOC professionals doing their utmost to combat cybercriminals. SOCs are spending so much time and effort triaging alerts and filtering false positives that there’s little room for creative threat hunting.

Data is the problem – and the solution

Alert fatigue is a result, not a root cause. When these security tools were initially developed, cybersecurity teams managed gigabytes of data each month from a limited number of computers on physically connected sites. Today, Security Operations Centers (SOCs) are tasked with handling security data from thousands of sources and devices worldwide, arriving in a wide variety of formats. The developers of these devices did not intend to simplify the lives of security teams, and the tools they designed to identify patterns often resemble a fire alarm in a volcano. The more data that is sent as an input to these machines, the more likely they are to malfunction, further exhausting and overwhelming already stretched security teams.

Well-intentioned leaders advocate for improved triaging, the use of automation, refined rules to reduce false-positive rates, and the application of popular technologies like AI and ML. Until we can stop security tools from being overwhelmed by large volumes of unstructured, unrefined, and chaotic data from diverse sources and formats, these fixes will be band aids on a gaping wound.

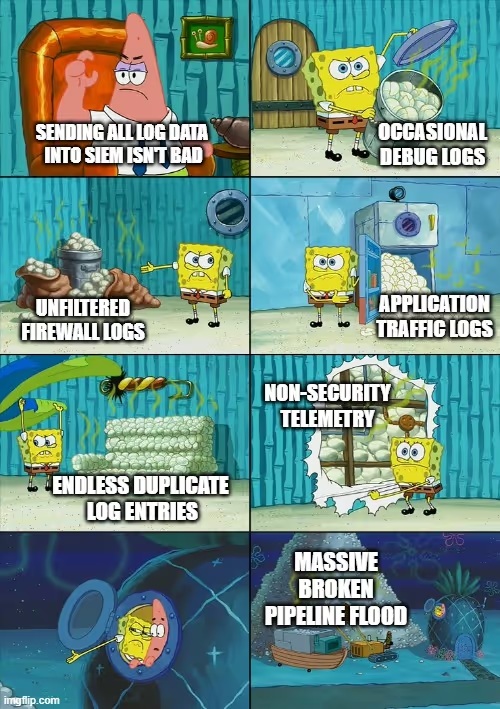

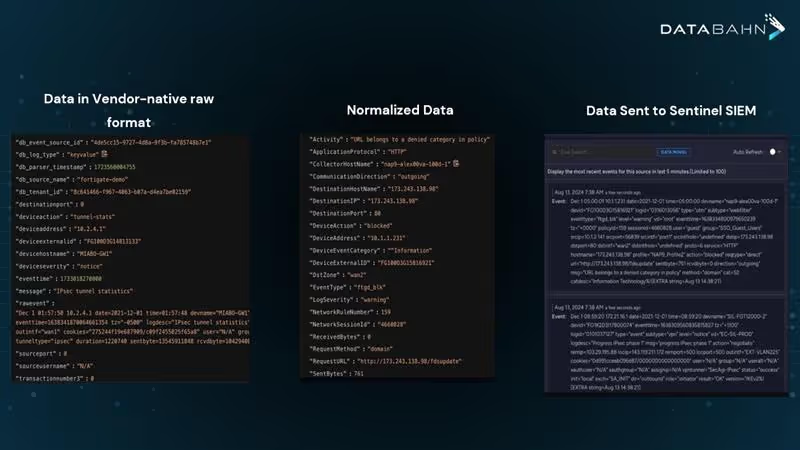

The best way to address alert fatigue is to filter data before it is ingested into downstream security tools. Consolidate, correlate, parse, and normalize data before it enters your SIEM or UEBA. If it isn’t needed for detection, store it in blob storage. If it’s duplicated or irrelevant, discard it. Don’t clutter your SIEM with poor data, and it won’t overwhelm your SOC with alerts no one requested.
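The triage described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DataBahn's implementation: the event types in `SECURITY_RELEVANT` and `DISCARDABLE`, the event fields, and the `triage` function are all hypothetical names chosen for the example.

```python
import hashlib
import json

# Hypothetical policy: event types treated as security-relevant.
SECURITY_RELEVANT = {"auth_failure", "malware_detected", "privilege_change"}
# Hypothetical policy: event types with no retention value.
DISCARDABLE = {"heartbeat", "debug"}


def fingerprint(event: dict) -> str:
    """Stable hash of an event's content, used to spot exact duplicates."""
    canonical = json.dumps(event, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def triage(events: list) -> dict:
    """Split events into SIEM-bound, archive-bound, and dropped buckets."""
    routed = {"siem": [], "archive": [], "dropped": []}
    seen = set()
    for event in events:
        key = fingerprint(event)
        # Duplicates and irrelevant events never reach a paid destination.
        if key in seen or event.get("type") in DISCARDABLE:
            routed["dropped"].append(event)
            continue
        seen.add(key)
        if event.get("type") in SECURITY_RELEVANT:
            routed["siem"].append(event)
        else:
            # Not needed for detection: keep it in cheap blob storage.
            routed["archive"].append(event)
    return routed


events = [
    {"type": "auth_failure", "user": "alice", "src": "10.0.0.5"},
    {"type": "auth_failure", "user": "alice", "src": "10.0.0.5"},  # duplicate
    {"type": "heartbeat", "host": "web-1"},
    {"type": "http_access", "path": "/index.html", "status": 200},
]
result = triage(events)
print({name: len(bucket) for name, bucket in result.items()})
# {'siem': 1, 'archive': 1, 'dropped': 2}
```

Only one of the four sample events reaches the SIEM here. In practice the relevance policy would be far richer than a set of type names, but the shape of the decision stays the same.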

How DataBahn helps

At DataBahn, we help enterprises cut through cybersecurity noise with our security data pipeline solution, which works around the clock to:

1. Aggregate and normalize data across tools and environments automatically

2. Apply AI-driven correlation and prioritization

3. Denoise the data going into the SIEM, ensuring more actionable alerts with full context

SOCs using DataBahn aren’t overwhelmed with alerts; they only see what’s relevant, allowing them to respond more quickly and effectively to threats. They are empowered to take a more strategic approach in managing operations, as their time isn’t wasted triaging and filtering out unnecessary alerts.

Organizations looking to safeguard their systems – and protect their SOC members – should shift from raw alert processing to smarter alert management, driven by an intelligent pipeline which combines automation, correlation, and transformation that filters out the noise and combats alert fatigue.

Interested in saving your SOC from alert fatigue? Contact DataBahn

In the past, we've written about how we solve this problem for Sentinel. You can read more here: AI-powered Sentinel Log Optimization

The Rise of Headless Cybersecurity

I see a lot more organizations heading toward headless cyber architecture. Traditionally, cybersecurity teams relied on one massive tool: the SIEM. For years, cybersecurity orgs funneled all their cyber data into it, not because it was optimal, but because it was the compliance checkbox.

That’s how SIEMs earned their core seat at the table. Over time, SIEMs evolved from log aggregators into something more sophisticated: first UEBA, then security analytics, and now, increasingly, SaaS-based platforms and AI SOC. But there’s a catch: in this model, you don’t truly own your data. It lives in the vendor’s ecosystem, locked into their proprietary format rather than an open standard.

You end up paying for storage, analytics, and access to your own telemetry—creating a cycle of dependency and vendor lock-in.

But the game is changing. What’s New?

SIEMs are not going away; they remain mission-critical. But they’re no longer the sole destination for all cyber data. Instead, they are being refocused: They now consume only Security-Relevant Data (SRDs)—purposefully curated feeds for advanced threat detection, correlation, and threat chaining. Nearly 80% of organizations have only integrated baseline telemetry—firewalls, endpoints, XDRs, and the like. But where’s the visibility into mission-critical apps? Your plant data? Manufacturing systems? The rest of your telemetry often remains siloed, unparsed, and not in open, interoperable formats like OTEL or OCSF.

The shift is this: that telemetry is now flowing into your Security Data Lake (SDL), parsed, normalized, and enriched with context like threat intel, HR systems, identity, and geo signals. This data increasingly lives in your environment: Databricks, Snowflake, Amazon Web Services (AWS), Microsoft Azure, Google Cloud, or Hydrolix.

With this shift, a new category is exploding: headless cybersecurity products—tools that sit on top of your data rather than ingesting it.

· Headless SIEMs: Built for detection, not data hoarding.

· Headless Vulnerability Analytics: Operating directly on vuln data inside your SDL.

· Headless Data Science: ML models run atop your lake, no extraction needed.

· Soon: Headless IAM & Access Analytics: Compliance and reporting directly from where access logs reside.

These solutions don’t route your data out; they bring their algorithms to your lake. This flips the control model.

To Get There: The Data Pipeline Must Evolve

What’s needed is an independent platform purpose-built for streaming ETL and pipeline management, the connective tissue that moves, filters, and enriches your telemetry in real time. A platform that’s-

· Lightweight and modular: drop a node anywhere to start collecting from a new business unit or acquisition.

· Broadly integrated: connecting with thousands of systems to maximize visibility.

· Smart at filtering: removing 60-80% of the Non-Security Data (NSD) that bloats your SIEM.

· Enrichment-first: applying threat intel, identity, geo, and other contextual data before forwarding to your Security Data Lake (SDL) and SIEM. Remember, analysts spend valuable time manually stitching together context during investigations. Pre-enriched data dramatically reduces that effort, cutting investigation time, improving accuracy, and accelerating response.

· AI-ready: feeding clean, contextualized data into your models, reducing noise and improving MTTD/MTTR. It also helps sanitize sensitive information before it leaves your environment.

· Insightful in motion: offering real-time observability as data flows through the pipeline.

In short, the pipeline becomes the foundation for modern security architecture and the fuel for your AI-driven transformation.
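To make the enrichment-first idea concrete, here is a small Python sketch that attaches threat intel, identity, and geo context to an event before it is forwarded. Everything in it is an assumption for illustration: the lookup tables are hard-coded stand-ins for live feeds, the addresses come from documentation ranges, and the field names (`src_ip`, `user`, `threat_label`) are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical lookup tables; a real pipeline would query live sources.
THREAT_INTEL = {"203.0.113.7": "known-botnet"}
IDENTITY = {"alice": {"department": "finance", "privileged": True}}
GEO_NETWORKS = {
    ipaddress.ip_network("203.0.113.0/24"): "NL",
    ipaddress.ip_network("198.51.100.0/24"): "US",
}


def lookup_country(ip: str) -> Optional[str]:
    """Return the country code of the first network containing the address."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_NETWORKS.items():
        if addr in network:
            return country
    return None


def enrich(event: dict) -> dict:
    """Return a copy of the event with context fields added."""
    enriched = dict(event)
    src = event.get("src_ip")
    if src:
        enriched["threat_label"] = THREAT_INTEL.get(src)
        enriched["src_country"] = lookup_country(src)
    user = event.get("user")
    if user:
        enriched["identity"] = IDENTITY.get(user)
    return enriched


event = {"type": "auth_failure", "user": "alice", "src_ip": "203.0.113.7"}
print(enrich(event))
```

Because the context is attached in the pipeline, an analyst opening this event already sees that the source is flagged and that the account is privileged, without running separate lookups during the investigation.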


Bottom line: we’re entering a new era where

· SIEMs do less ingestion, more detection

· Data lakes become the source of truth, enriched, stored in your format

· Vendors no longer take your data—they work on top of it

· Security teams get flexibility, visibility, and control—without the lock-in


This is the rise of modular, headless cybersecurity, where your data stays yours. Analytics run where you want and computing happens on your terms, all while you retain complete control over your data.

Security Data Pipeline Platforms: the Data Layer Optimizing SIEMs and SOCs

DataBahn recognized as leading vendor in SACR 2025 Security Data Pipeline Platforms Market Guide

As security operations become more complex and SOCs face increasingly sophisticated threats, the data layer has emerged as the critical foundation. SOC effectiveness now depends on the quality, relevance, and timeliness of data it processes; without a robust data layer, SIEM-based analytics, detection, and response automation crumble under the deluge of irrelevant data and unreliable insights.

Recognizing the need to engage with current SIEM problems, security leaders are adopting a new breed of security data tools known as Security Data Pipeline Platforms. These platforms sit beneath the SIEM, acting as a control plane for ingesting, enriching, and routing security data in real time. In its 2025 Market Guide, SACR explores this fast-emerging category and names DataBahn among the vendors leading this shift.

Understanding Security Data Pipelines: A New Approach

The SACR report highlights this breaking point: organizations typically collect data from 40+ security tools, generating terabytes daily. This volume overwhelms legacy systems, creating three critical problems:

First, prohibitive costs force painful tradeoffs between security visibility and budget constraints. Second, analytics performance degrades as data volumes increase. Finally, security teams waste precious time managing infrastructure rather than investigating threats.

Fundamentally, security data pipeline platforms partially or fully resolve the data volume problems with differing outcomes and performance. DataBahn decouples collection from storage and analysis, and automates and simplifies data collection, transformation, and routing. This architecture reduces costs while improving visibility and analytic capabilities, the exact opposite of the traditional, SIEM-based approach.

AI-Driven Intelligence: Beyond Basic Automation

The report examines how AI is reshaping security data pipelines. While many vendors claim AI capabilities, few have integrated intelligence throughout the entire data lifecycle.

DataBahn's approach embeds intelligence at every layer of the security data pipeline. Rather than simply automating existing processes, our AI continually optimizes the entire data journey—from collection to transformation to insight generation.

This intelligence layer represents a paradigm shift from reactive to proactive security, moving beyond "what happened?" to answering "what's happening now, and what should I do about it?"

Take threat detection as an example: traditional systems require analysts to create detection rules based on known patterns. DataBahn's AI continually learns from your environment, identifying anomalies and potential threats without predefined rules.

The DataBahn Platform: Engineered for Modern Security Demands

In an era where security data is both abundant and complex, DataBahn's platform stands out by offering intelligent, adaptable solutions that cater to the evolving needs of security teams.

Agentic AI for Security Data Engineering: Our agentic AI, Cruz, automates the heavy lifting across your data pipeline—from building connectors to orchestrating transformations. Its self-healing capabilities detect and resolve pipeline issues in real-time, minimizing downtime and maintaining operational efficiency.

Intelligent Data Routing and Cost Optimization: The platform evaluates telemetry data in real-time, directing only high-value data to cost-intensive destinations like SIEMs or data lakes. This targeted approach reduces storage and processing costs while preserving essential security insights.

Flexible SIEM Integration and Migration: DataBahn's decoupled architecture facilitates seamless integration with various SIEM solutions. This flexibility allows organizations to migrate between SIEM platforms without disrupting existing workflows or compromising data integrity.

Enterprise-Wide Coverage: Security, Observability, and IoT/OT: Beyond security data, DataBahn's platform supports observability, application, and IoT/OT telemetry, providing a unified solution for diverse data sources. With 400+ prebuilt connectors and a modular architecture, it meets the needs of global enterprises managing hybrid, cloud-native, and edge environments.

Next-Generation Security Analytics

One of DataBahn’s standout features highlighted by SACR is our newly launched "insights layer", Reef. Reef transforms how security professionals interact with data through conversational AI. Instead of writing complex queries or building dashboards, analysts simply ask questions in natural language: "Show me failed login attempts for privileged users in the last 24 hours" or "Show me all suspicious logins in the last 7 days".

Critically, Reef decouples insight generation from traditional ingestion models, allowing security analysts to interact directly with their data and gain context-rich insights without cumbersome queries or manual analysis. This significantly reduces the mean time to detection (MTTD) and response (MTTR), allowing teams to prioritize genuine threats quickly.

Moving Forward with Intelligent Security Data Management

DataBahn's inclusion in the SACR 2025 Market Guide affirms our position at the forefront of security data innovation. As threat environments grow more complex, the difference between security success and failure increasingly depends on how effectively organizations manage their data.

We invite you to download the complete SACR 2025 Market Guide to understand how security data pipeline platforms are reshaping the industry landscape. For a personalized discussion about transforming your security operations, schedule a demo with our team.

In today's environment, your security data should work for you, not against you.

Telemetry Data Pipelines - and how they impact decision-making for enterprises


For effective data-driven decision-making, decision-makers must access accurate and relevant data at the right time. Security, sales, manufacturing, resource, inventory, supply chain, and other business-critical data help inform critical decisions. Today’s enterprises need to aggregate relevant data from around the world and various systems into a single location for analysis and presentation to leaders in a digestible format in real time for them to make these decisions effectively.

Why telemetry data pipelines matter

Today, businesses of all sizes need to collect information from various sources to ensure smooth operations. For instance, a modern retail brand must gather sales data from multiple storefronts across different locations, its website, and third-party sellers like e-commerce and social media platforms to understand how its products performed. This data also helps inform decisions on inventory, stocking, pricing, and marketing.

For large multi-national commercial enterprises, this data and its importance get magnified. Executives have to make critical decisions with millions of dollars at stake and in an accelerated timeline. They also have more complex and sophisticated systems with different applications and digital infrastructures that generate large amounts of data. Both old and new-age companies must build elaborate systems to connect, collect, aggregate, make sense of, and derive insights from this data.

What is a telemetry data pipeline?

Telemetry data encompasses various types of information captured and collected from remote and hard-to-reach sources. The term ‘telemetry’ comes from the Greek roots ‘tele’ (“far”) and ‘metron’ (“measure”), by way of the French word ‘télémètre’, a device for measuring from afar. In the context of modern enterprise businesses, telemetry data includes application logs, events, metrics, and performance indicators which provide essential information that helps run, maintain, and optimize systems and operations.

A telemetry pipeline, as the name implies, is the infrastructure that collects and moves the data from the source to the destination. But a telemetry data pipeline doesn’t just move data; it also aggregates and processes this data to make it usable, and routes it to the necessary analytics or security destinations where it can be used by leaders to make important decisions.

Core functions of a telemetry data pipeline

Telemetry data pipelines have 3 core functions:

- Collecting data from multiple sources;

- Processing and preparing the data for analysis; and

- Transferring the data to the appropriate storage destination.

DATA COLLECTION

The first phase of a data pipeline is collecting data from various sources. These sources can include products, applications, servers, datasets, devices, and sensors, and they can be spread across different networks and locations. The collection of this data from these different sources and moving them towards a central repository is the first part of the data lifecycle.

Challenges: With the growing number of sources, IT and data teams find it difficult to integrate new ones. API-based integrations can take four to eight weeks for an enterprise data engineering team, placing significant demands on technical engineering bandwidth. Monitoring and tracking sources for anomalous behavior, identifying blocked data pipelines, and ensuring the seamless flow of telemetry data are major pain points for enterprises. With data volumes growing at ~30% Y-o-Y, being able to scale data collection to manage spikes in data flow is an important problem for engineering teams to solve, but they don’t always have the time and resources to invest in such a project.
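One of the monitoring problems named above, spotting a source that has silently stopped sending, can be sketched as a simple staleness check. This is an illustrative sketch only: the source names, the 30-minute tolerance, and the `find_silent_sources` helper are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone


def find_silent_sources(last_seen: dict, now: datetime,
                        max_silence: timedelta) -> list:
    """Return sources whose most recent event is older than max_silence."""
    return sorted(
        name for name, seen_at in last_seen.items()
        if now - seen_at > max_silence
    )


# Hypothetical snapshot of when each source last delivered an event.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
last_seen = {
    "firewall-edge": now - timedelta(minutes=2),
    "okta": now - timedelta(minutes=45),
    "plant-sensors": now - timedelta(hours=6),
}

silent = find_silent_sources(last_seen, now, timedelta(minutes=30))
print(silent)  # ['okta', 'plant-sensors']
```

A single global tolerance is the simplest possible policy. Sources with very different cadences, such as a chatty firewall and a batch export, would likely need per-source thresholds.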

DATA PROCESSING & PREPARATION

The second phase of a data pipeline is processing and aggregating the data, which requires multiple data operations such as cleansing, de-duplication, parsing, and normalization. Raw data is not suitable for decision-making, and it needs to be aggregated from different sources. Data from different sources has to be converted into the same format, stitched together for correlation and enrichment, and prepared for further refinement, insights, and decision-making.
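As a rough illustration of parsing, normalization, and de-duplication, the sketch below reads two input formats (JSON and space-separated `key=value`), maps source-specific field names onto one schema, and drops exact duplicates. The alias table and the target field names are hypothetical; a real deployment might map onto a standard such as OCSF instead.

```python
import json

# Hypothetical mapping from source-specific field names to a common schema.
FIELD_ALIASES = {
    "src": "src_ip", "source_ip": "src_ip", "srcip": "src_ip",
    "usr": "user", "username": "user",
    "ts": "timestamp", "time": "timestamp",
}


def parse_line(line: str) -> dict:
    """Parse either a JSON object or a space-separated key=value line."""
    line = line.strip()
    if line.startswith("{"):
        return json.loads(line)
    pairs = (token.split("=", 1) for token in line.split() if "=" in token)
    return {key: value for key, value in pairs}


def normalize(record: dict) -> dict:
    """Lower-case field names and rename known aliases."""
    return {
        FIELD_ALIASES.get(key.lower(), key.lower()): value
        for key, value in record.items()
    }


def dedupe(records: list) -> list:
    """Keep the first occurrence of each distinct record."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(sorted((k, str(v)) for k, v in record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


raw = [
    '{"username": "alice", "source_ip": "10.0.0.5"}',
    "usr=alice src=10.0.0.5",
    "usr=bob src=10.0.0.9",
]
records = dedupe([normalize(parse_line(line)) for line in raw])
print(records)
# [{'user': 'alice', 'src_ip': '10.0.0.5'}, {'user': 'bob', 'src_ip': '10.0.0.9'}]
```

The first two lines describe the same event in different formats, so they only collapse into one record after normalization. De-duplicating before normalizing would miss them.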

Challenges: Managing and parsing the different formats can get complicated, and with many enterprises building or having built custom applications, parsing and normalizing that data is challenging. Changing log and data schemas can create cascading failures in your data pipeline. Then there are challenges such as identifying, masking, and quarantining sensitive data to protect PII from being leaked.
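A minimal sketch of the masking step, assuming only two kinds of PII (email addresses and US Social Security numbers) and simple regular expressions. The patterns, the placeholder tokens, and the `mask_pii` helper are illustrative assumptions; production-grade detection needs far broader coverage and validation.

```python
import re

# Deliberately simple patterns, for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text: str) -> tuple:
    """Return the masked text and whether anything was masked."""
    masked, email_hits = EMAIL.subn("<EMAIL>", text)
    masked, ssn_hits = US_SSN.subn("<SSN>", masked)
    return masked, (email_hits + ssn_hits) > 0


lines = [
    "login by alice@example.com ssn 123-45-6789",
    "disk usage 91%",
]

clean, quarantined = [], []
for line in lines:
    masked, had_pii = mask_pii(line)
    # Lines that contained PII are masked and set aside for review.
    (quarantined if had_pii else clean).append(masked)

print(clean)        # ['disk usage 91%']
print(quarantined)  # ['login by <EMAIL> ssn <SSN>']
```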

DATA ROUTING

The final stage is taking the data to its intended destination – a data lake or lakehouse, a cloud storage service, or an observability or security tool. For this, data has to be put into a specific format and has to be optimally segregated to avoid the high cost of the real-time analysis tools.

Challenges: Different types of telemetry data have different values, and segregating the data optimally to manage and reduce the cost of expensive SIEM and observability tools is a high priority for most enterprise data teams. The ‘noise’ in the data also causes an increase in alerts and makes it harder for teams to find relevant data in the stream coming their way. Unfortunately, segregating and filtering the data optimally is difficult as engineers can't predict what data is useful and what data isn’t. Additionally, the increasing volume of data combined with stagnant IT budgets means that many teams are making the sub-optimal choice of routing all data from some noisy sources into long-term storage, meaning that some insights are lost.
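The cost trade-off in routing comes down to simple arithmetic, sketched below. All figures are invented for illustration and do not come from any customer or benchmark: `DAILY_GB` is a hypothetical daily volume per source, and `SIEM_FRACTION` is a hypothetical share of each source judged worth sending to the SIEM.

```python
# Hypothetical daily volume per source, in GB.
DAILY_GB = {"firewall": 400.0, "edr": 150.0, "dns": 300.0, "app_debug": 150.0}

# Hypothetical share of each source that is routed to the SIEM.
SIEM_FRACTION = {"firewall": 0.25, "edr": 1.0, "dns": 0.10, "app_debug": 0.0}


def plan_routing(daily_gb: dict, siem_fraction: dict) -> dict:
    """Compute SIEM-bound and archive-bound volume for a routing policy."""
    total = sum(daily_gb.values())
    # Sources without a policy default to 1.0: send everything to the SIEM.
    siem = sum(
        gb * siem_fraction.get(source, 1.0) for source, gb in daily_gb.items()
    )
    return {
        "total_gb": total,
        "siem_gb": siem,
        "archive_gb": total - siem,
        "siem_reduction_pct": 100.0 * (1.0 - siem / total),
    }


plan = plan_routing(DAILY_GB, SIEM_FRACTION)
print(plan)
```

With these made-up numbers, 280 GB of the 1,000 GB daily total reaches the SIEM. Defaulting unknown sources to full SIEM ingestion is a conservative choice that reflects the difficulty noted above: when nobody can yet say what is useful, nothing is filtered until a policy exists.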

How can we make telemetry data pipelines better?

Organizations today generate terabytes of data daily and use telemetry data pipelines to move the data in real-time to derive actionable insights that inform important business decisions. However, there are major challenges in building and managing telemetry data pipelines, even if they are indispensable.

Agentic AI solves for all these challenges and is capable of delivering greater efficiency in managing and optimizing telemetry data pipeline health. An agentic AI can –

- Discover, deploy, and integrate with new data sources instantly;

- Parse and normalize raw data from structured and unstructured sources;

- Track and monitor pipeline health; be modular and sustain loss-less data flow;

- Identify and quarantine sensitive and PII data instantly;

- Detect and fix schema drift and data quality issues;

- Segregate and evaluate data for storage in long-term storage, data lakes, or SIEM/observability tools;

- Automate the transformation of data into different formats for different destinations;

- Save engineering team bandwidth, which can be deployed on more strategic priorities.
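Schema drift, one of the items in the list above, is easy to illustrate with plain code. The sketch below compares an incoming record against an expected schema and reports what changed. It shows a rule-based check, not an agentic system and not how Cruz works; the schema, field names, and `detect_drift` helper are hypothetical.

```python
def detect_drift(expected: dict, record: dict) -> dict:
    """Report missing fields, unexpected fields, and type mismatches."""
    missing = sorted(name for name in expected if name not in record)
    unexpected = sorted(name for name in record if name not in expected)
    wrong_type = sorted(
        name for name, expected_type in expected.items()
        if name in record and not isinstance(record[name], expected_type)
    )
    return {
        "missing": missing,
        "unexpected": unexpected,
        "wrong_type": wrong_type,
    }


# Hypothetical schema the downstream parser was built against.
EXPECTED = {"timestamp": str, "user": str, "bytes": int}

# A record after an upstream change: a renamed field and a stringified number.
record = {
    "timestamp": "2025-01-01T00:00:00Z",
    "username": "alice",
    "bytes": "512",
}

report = detect_drift(EXPECTED, record)
print(report)
# {'missing': ['user'], 'unexpected': ['username'], 'wrong_type': ['bytes']}
```

A report like this is the raw signal. Deciding that `username` is a rename of `user`, and repairing the mapping automatically, is the harder part that the list above attributes to an agent.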

Curious about how agentic AI can solve your data problems? Get in touch with us to explore Cruz, our agentic AI data-engineer-in-a-box to solve your telemetry data challenges.

Reduced Alert Fatigue: 50% Log Volume Reduction with AI-powered log prioritization

Reduce Alert Fatigue in Microsoft Sentinel

AI-powered log prioritization delivers 50% log volume reduction

Microsoft Sentinel has rapidly become the go-to SIEM for enterprises needing strong security monitoring and advanced threat detection. A Forrester study found that companies using Microsoft Sentinel can achieve up to a 234% ROI. Yet many security teams fall short, drowning in alerts, rising ingestion costs, and missed threats.

The issue isn’t Sentinel itself, but the raw, unfiltered logs flowing into it.

As organizations bring in data from non-Microsoft sources like firewalls, networks, and custom apps, security teams face a flood of noisy, irrelevant logs. This overload leads to alert fatigue, higher costs, and increased risk of missing real threats.

AI-powered log ingestion solves this by filtering out low-value data, enriching key events, and mapping logs to the right schema before they hit Sentinel.

Why Security Teams Struggle with Alert Overload (The Log Ingestion Nightmare)

According to recent research by DataBahn, SOC analysts spend nearly 2 hours daily on average chasing false positives. This is one of the biggest efficiency killers in security operations.

Solutions like Microsoft Sentinel promise full visibility across your environment. But on the ground, it’s rarely that simple.

There’s more data. More dashboards. More confusion. Here are two major reasons security teams struggle to see beyond alerts on Sentinel.

- Built for everything, overwhelming for everyone

Microsoft Sentinel connects with almost everything: Azure, AWS, Defender, Okta, Palo Alto, and more.

But more integrations mean more logs. And more logs mean more alerts.

Most organizations rely on default detection rules, which are overly sensitive and trigger alerts for every minor fluctuation.

Unless every rule, signal, and threshold is fine-tuned (and they rarely are), these alerts become noise, distracting security teams from actual threats.

Tuning requires deep KQL expertise and time.

For already stretched-thin teams, spending days fine-tuning detection rules (with accuracy) is unsustainable.

It gets harder when you bring in data from non-Microsoft sources like firewalls, network tools, or custom apps.

Setting up these pipelines can take 4 to 8 weeks of engineering work, something most SOC teams simply don’t have the bandwidth for.

- Noisy data in = noisy alerts out

Sentinel ingests logs from every layer, including network, endpoints, identities, and cloud workloads. But if your data isn’t clean, normalized, or mapped correctly, you’re feeding garbage into the system. What comes out are confusing alerts, duplicates, and false positives. In threat detection, your log quality is everything. If your data fabric is messy, your security outcomes will be too.

The Cost Is More Than Alert Fatigue

False alarms don’t just wear down your security team. They can also burn through your budget. When you're ingesting terabytes of logs from various sources, data ingestion costs can escalate rapidly.

Microsoft Sentinel's pricing calculator estimates that ingesting 500 GB of data per day can cost approximately $525,888 annually. That’s a discounted rate.

While the pay-as-you-go model is appealing, without effective data management, costs can grow unnecessarily high. Many organizations end up paying to store and process redundant or low-value logs. This adds both cost and alert noise. And the problem is only growing. Log volumes are increasing at a rate of 25%+ year over year, which means costs and complexity will only continue to rise if data isn’t managed wisely. By filtering out irrelevant and duplicate logs before ingestion, you can significantly reduce expenses and improve the efficiency of your security operations.
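The arithmetic behind these figures can be sketched as follows. The daily volume, annual cost, and 25% growth rate come from the text above; the assumption that cost scales linearly with volume (ignoring commitment-tier pricing) is a simplification, and the function names are illustrative.

```python
# Figures quoted above: 500 GB/day costing roughly $525,888 per year.
DAILY_GB = 500
ANNUAL_COST_USD = 525_888
DAYS_PER_YEAR = 365


def implied_rate_per_gb(annual_cost: float, daily_gb: float) -> float:
    """Effective price per ingested GB implied by an annual cost."""
    return annual_cost / (daily_gb * DAYS_PER_YEAR)


def projected_annual_cost(rate_per_gb: float, daily_gb: float,
                          reduction: float = 0.0, growth: float = 0.0,
                          years: int = 0) -> float:
    """Annual cost after compound volume growth and a filtering reduction.

    Assumption: cost is linear in volume, which real pricing tiers are not.
    """
    volume = daily_gb * (1 + growth) ** years * (1 - reduction)
    return rate_per_gb * volume * DAYS_PER_YEAR


rate = implied_rate_per_gb(ANNUAL_COST_USD, DAILY_GB)
half = projected_annual_cost(rate, DAILY_GB, reduction=0.5)
grown = projected_annual_cost(rate, DAILY_GB, growth=0.25, years=3)

print(f"Implied rate: ${rate:.2f} per GB")
print(f"Annual cost with 50% of volume filtered: ${half:,.0f}")
print(f"Annual cost after 3 years of 25% growth: ${grown:,.0f}")
```

Under the linear assumption, three years of 25% growth nearly doubles the bill, which is why filtering before ingestion matters more every year.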

What’s Really at Stake?

Every security leader knows the math: reduce log ingestion to cut costs and reduce alert fatigue. But what if the log you filter out holds the clue to your next breach?

For most teams, reducing log ingestion feels like a gamble with high stakes because they lack clear insights into the quality of their data. What looks irrelevant today could be the breadcrumb that helps uncover a zero-day exploit or an advanced persistent threat (APT) tomorrow. To stay ahead, teams must constantly evaluate and align their log sources with the latest threat intelligence and Indicators of Compromise (IOCs). It’s complex. It’s time-consuming. Dashboards without actionable context provide little value.

"Security teams don’t need more dashboards. They need answers. They need insights."

— Mihir Nair, Head of Architecture & Innovation at DataBahn

These answers and insights come from advanced technologies like AI.

Intercept The Next Threat With AI-Powered Log Prioritization

According to IBM’s cost of a data breach report, organizations using AI reported significantly shorter breach lifecycles, averaging only 214 days.

AI changes how Microsoft Sentinel handles data. It analyzes incoming logs and picks out the relevant ones. It filters out redundant or low-value logs.

Unlike traditional static rules, AI within Sentinel learns your environment’s normal behavior, detects anomalies, and correlates events across integrated data sources like Azure, AWS, firewalls, and custom applications. This helps Sentinel find threats hidden in huge data streams. It cuts down the noise that overwhelms security teams. AI also adds context to important logs. This helps prioritize alerts based on true risk.

In short, alert fatigue drops. Ingestion costs go down. Detection and response speed up.

Why Traditional Log Management Hampers Sentinel Performance

The conventional approach to log management struggles to scale with modern security demands as it relies on static rules and manual tuning. When unfiltered data floods Sentinel, analysts find themselves filtering out noise and managing massive volumes of logs rather than focusing on high-priority threats. Diverse log formats from different sources further complicate correlation, creating fragmented security narratives instead of cohesive threat intelligence.

Without an intelligent filtering mechanism, security teams become overwhelmed, and false positives and alert fatigue increase significantly, obscuring genuine threats. This directly impacts MTTR (Mean Time to Respond), leaving security teams constantly reacting to alerts rather than proactively hunting threats.

The key to overcoming these challenges lies in effectively optimizing how data is ingested, processed, and prioritized before it ever reaches Sentinel. This is precisely where DataBahn’s AI-powered data pipeline management platform excels, delivering seamless data collection, intelligent data transformation, and log prioritization to ensure Sentinel receives only the most relevant and actionable security insights.

AI-driven Smart Log Prioritization is the Solution

Reducing Data Volume and Alert Fatigue by 50% while Optimizing Costs

By implementing intelligent log prioritization, security teams achieve what previously seemed impossible: better security visibility with less data. DataBahn's precision filtering ensures only high-quality, security-relevant data reaches Sentinel, reducing overall volume by up to 50% without creating visibility gaps. This targeted approach immediately benefits security teams by significantly reducing alert fatigue and false positives: alert volume drops by 37%, and analysts can focus on genuine threats rather than endless triage.

The results extend beyond operational efficiency to significant cost savings. With built-in transformation rules, intelligent routing, and dynamic lookups, organizations can implement this solution without complex engineering efforts or security architecture overhauls. A UK-based enterprise consolidated multiple SIEMs into Sentinel using DataBahn’s intelligent log prioritization, cutting annual ingestion costs by $230,000. The solution ensured Sentinel received only security-relevant data, drastically reducing irrelevant noise and enabling analysts to swiftly identify genuine threats, significantly improving response efficiency.

Future-Proofing Your Security Operations

As threat actors deploy increasingly sophisticated techniques and data volumes continue growing at 28% year-over-year, the gap between traditional log management and security needs will only widen. Organizations implementing AI-powered log prioritization gain immediate operational benefits while building adaptive defenses for tomorrow's challenges.

This advanced technology by DataBahn creates a positive feedback loop: as analysts interact with prioritized alerts, the system continuously refines its understanding of what constitutes a genuine security signal in your specific environment. This transforms security operations from reactive alert processing to proactive threat hunting, enabling your team to focus on strategic security initiatives rather than data management.

Conclusion

The question isn't whether your organization can afford this technology—it's whether you can afford to continue without it as data volumes expand exponentially. With DataBahn’s intelligent log filtering, organizations significantly benefit by reducing alert fatigue, maximizing the potential of Microsoft Sentinel to focus on high-priority threats while minimizing unnecessary noise. After all, in modern security operations, it’s not about having more data—it's about having the right data.

Watch this webinar featuring Davide Nigro, Co-Founder of DOTDNA, as he shares how they leveraged DataBahn to significantly reduce data overload, optimizing Sentinel performance and cost for one of their UK-based clients.


Sentinel best practices: How SOCs can optimize Sentinel costs & performance

Microsoft Sentinel best practices

How SOCs can optimize Sentinel costs & performance

Enterprises and security teams are increasingly opting for Microsoft Sentinel for its comprehensive service stack, advanced threat intelligence, and automation capabilities, which facilitate faster investigations.

However, security teams are often caught off guard by the rapid escalation of data ingestion costs with Sentinel. As organizations scale their usage of Sentinel, the volume of data they ingest increases exponentially. This surge in data volume results in higher licensing costs, adding to the financial burden for enterprises. Beyond the cost implications, this data overload complicates threat identification and response, often resulting in delayed detections or missed signals entirely. Security teams find themselves constantly struggling to filter noise, manage alert volumes, and maintain operational efficiency while working to extract meaningful insights from overwhelming data streams.

The Data Overload Problem for Microsoft Sentinel

One of Sentinel's biggest strengths is its ease of integrating Microsoft data sources. SIEM operators can connect Azure, Office, and other Microsoft sources to Sentinel with ease. However, the challenge emerges when integrating non-Microsoft sources, which requires creating custom integrations and managing data pipelines.

For Sentinel to provide comprehensive security coverage and effective threat detection, all relevant security data must be routed through the platform. This requires connecting various security data sources such as firewalls, EDR/XDR, and even business applications to Sentinel, resulting in a 4 to 8 week data engineering effort that SOCs have to absorb.

On the other hand, enterprises often stop sending firewall logs to Sentinel due to the increasing log volume and costs associated with unexpected data volume spikes, which also lead to frequent breaks and issues in the data pipelines.

Then vs. Now: Key to Faster Threat Detection

Traditional data classification methods struggle to keep pace with modern security challenges. Security teams often rely on predefined rules or manual processes to categorize and prioritize data. As volumes expand exponentially, these teams find themselves ill-equipped to handle large data ingestions, resulting in critical losses of real-time insights!

DataBahn aids Sentinel deployments by streamlining data collection and ingestion with over 400 plug-and-play connectors. The platform intelligently defines data routing between basic and analytics tables while deploying strategic staging locations to efficiently publish data from third-party products into your Sentinel environment. DataBahn’s volume reduction functions, such as aggregation and suppression, convert noisy logs like network traffic into manageable insights that can be loaded into Sentinel, effectively reducing both data volume and overall query execution time.
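As a rough illustration of what aggregation and suppression mean for network traffic logs, consider the sketch below. It does not reflect DataBahn's implementation; the event fields (`ts`, `src`, `dst`, `port`, `bytes`), the window sizes, and both function names are assumptions made for the example.

```python
from collections import defaultdict


def aggregate_flows(events, window_seconds=60):
    """Collapse per-connection records into one summary per
    (src, dst, port) tuple and time window."""
    buckets = defaultdict(lambda: {"count": 0, "bytes": 0})
    for e in events:
        window_start = e["ts"] - e["ts"] % window_seconds
        key = (e["src"], e["dst"], e["port"], window_start)
        buckets[key]["count"] += 1
        buckets[key]["bytes"] += e["bytes"]
    return [
        {"src": s, "dst": d, "port": p, "window_start": w, **stats}
        for (s, d, p, w), stats in buckets.items()
    ]


def suppress_repeats(events, key_fields, cooldown_seconds=300):
    """Forward an event only if no event with the same key was
    forwarded during the preceding cooldown period."""
    last_sent = {}
    kept = []
    for e in sorted(events, key=lambda ev: ev["ts"]):
        key = tuple(e[f] for f in key_fields)
        if key not in last_sent or e["ts"] - last_sent[key] >= cooldown_seconds:
            kept.append(e)
            last_sent[key] = e["ts"]
    return kept


flows = [
    {"ts": 0, "src": "10.0.0.1", "dst": "10.0.0.9", "port": 443, "bytes": 100},
    {"ts": 10, "src": "10.0.0.1", "dst": "10.0.0.9", "port": 443, "bytes": 200},
    {"ts": 59, "src": "10.0.0.1", "dst": "10.0.0.9", "port": 443, "bytes": 50},
    {"ts": 61, "src": "10.0.0.1", "dst": "10.0.0.9", "port": 443, "bytes": 25},
]
summary = aggregate_flows(flows)
deduped = suppress_repeats(flows, key_fields=("src", "dst", "port"))
print(len(flows), "raw ->", len(summary), "aggregated,", len(deduped), "after suppression")
```

Aggregation keeps totals while dropping per-connection detail; suppression drops repeats outright. Which one fits depends on whether downstream detections need the counts.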

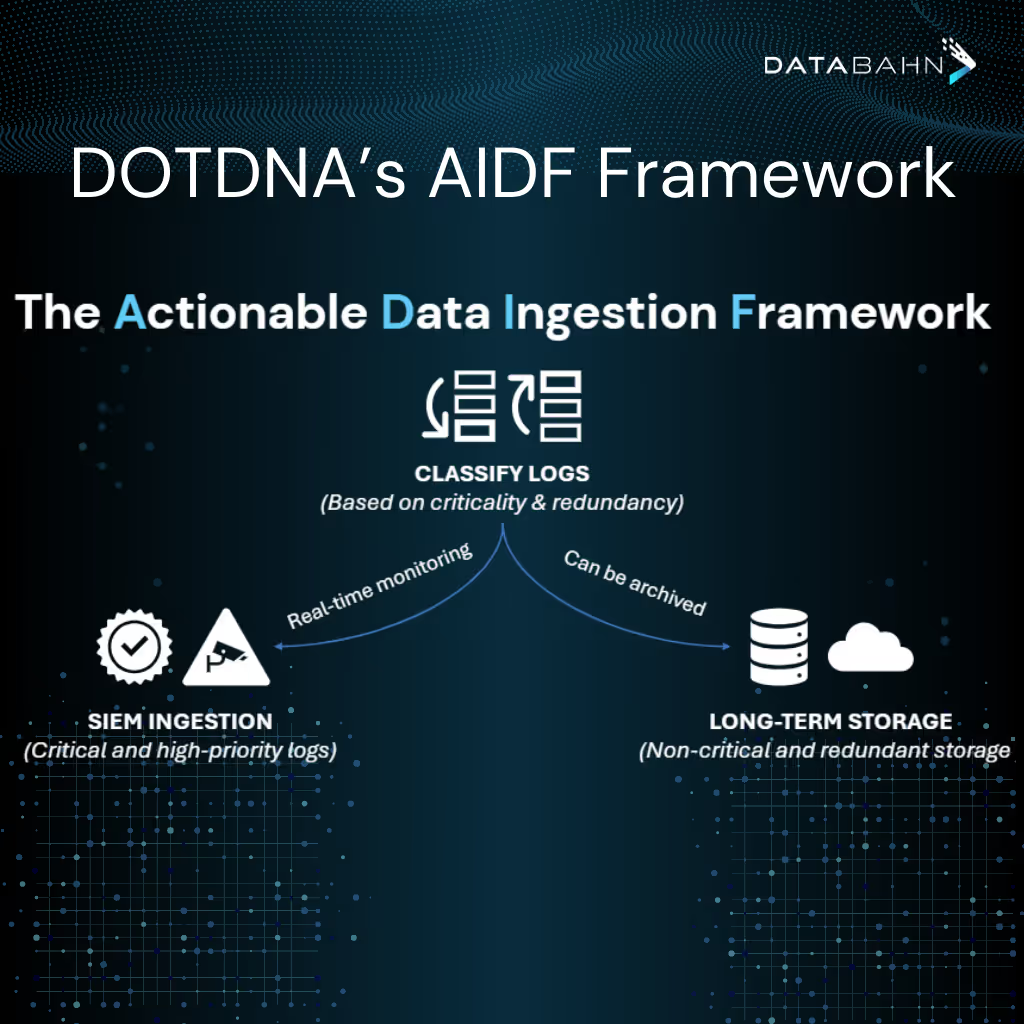

DOTDNA's ADIF Framework

DOTDNA has developed and promotes the Actionable Data Ingestion Framework (ADIF), designed to separate signal from noise by sorting your log data into two camps: critical, high-priority logs that are sent to Security Information and Event Management (SIEM) for real-time analysis, and non-critical background data that can be kept long-term in cost-effective storage.

The framework streamlines log ingestion processes, prioritizes truly critical security events, eliminates redundancy, and precisely aligns with your specific security use cases. This targeted approach ensures your CyberOps team remains focused on high-priority, actionable data, enabling enhanced threat detection and more efficient response. The result is improved operational efficiency and significant cost savings. The framework guarantees that only actionable information is processed, facilitating faster investigations and better resource allocation.
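The two-camp split can be sketched as a simple routing function. This is only an illustration of the idea, not DOTDNA's actual rule set: the event types, the severity threshold, and the `LogEvent` and `route` names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical criteria; a real deployment would derive these from its
# own security use cases and threat intelligence.
CRITICAL_EVENT_TYPES = {"auth_failure", "privilege_change", "malware_detected"}
MIN_SIEM_SEVERITY = 7


@dataclass
class LogEvent:
    source: str
    event_type: str
    severity: int  # 0 (informational) to 10 (critical)


def route(event: LogEvent) -> str:
    """Return "siem" for high-priority events and "archive" for the rest."""
    if event.event_type in CRITICAL_EVENT_TYPES:
        return "siem"
    if event.severity >= MIN_SIEM_SEVERITY:
        return "siem"
    return "archive"


events = [
    LogEvent("firewall", "connection_allowed", 2),
    LogEvent("idp", "auth_failure", 5),
    LogEvent("edr", "process_start", 8),
]
destinations = [route(e) for e in events]
print(destinations)
```

Nothing is discarded in this sketch: low-priority events still land in the archive, so they remain available for later investigation.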

The Real Impact

Following an acquisition, a UK-based enterprise needed to consolidate multiple SIEM and SOC providers into a single Sentinel instance while effectively managing data volumes and license costs. DOTDNA implemented DataBahn's Data Fabric to architect a solution that intelligently filters, optimizes, and dynamically tags and routes only security-relevant data to Sentinel, enabling the enterprise to substantially reduce its ingestion and data storage costs.

Optimizing Log Implementation via DOTDNA: Through the strategic implementation of this architecture, DOTDNA created a targeted solution that prioritizes genuine security signals before routing to Sentinel. This precision approach reduced the firm's ingestion and data storage costs by $230,000 annually while maintaining comprehensive security visibility across all systems.

Reduced Sentinel Ingestion Costs via DataBahn’s Data Fabric: The DataBahn Data Fabric Solution precisely orchestrates data flows, extracting meaningful security insights and delivering only relevant information to your Sentinel SIEM. This strategic filtering achieves a significant reduction in data volume without compromising security visibility, maximizing both your security posture and ROI.

Conclusion

As data volumes exponentially grow, DataBahn's Data Fabric empowers security teams to shift from reactive firefighting to proactive threat hunting. Without a modern data classification framework like ADIF, security teams risk feeling overwhelmed by irrelevant data, potentially leading to missed threats and delayed responses. Take control of your security data today with a strategic approach that prioritizes actionable intelligence. By implementing a solution that delivers only the most relevant data to your security tools, transform your security operations from data overload to precision threat detection—because effective security isn't about more data, it's about the right data.

This post is based on a conversation between Davide, Founder of DOTDNA, and Databahn's CPO, Aditya Sundararam. You can view this conversation on LinkedIn here.

Identity Data Management - how DataBahn solves the 'first-mile' data challenge

Identity Data Management

and how DataBahn solves the 'first-mile' identity data challenge

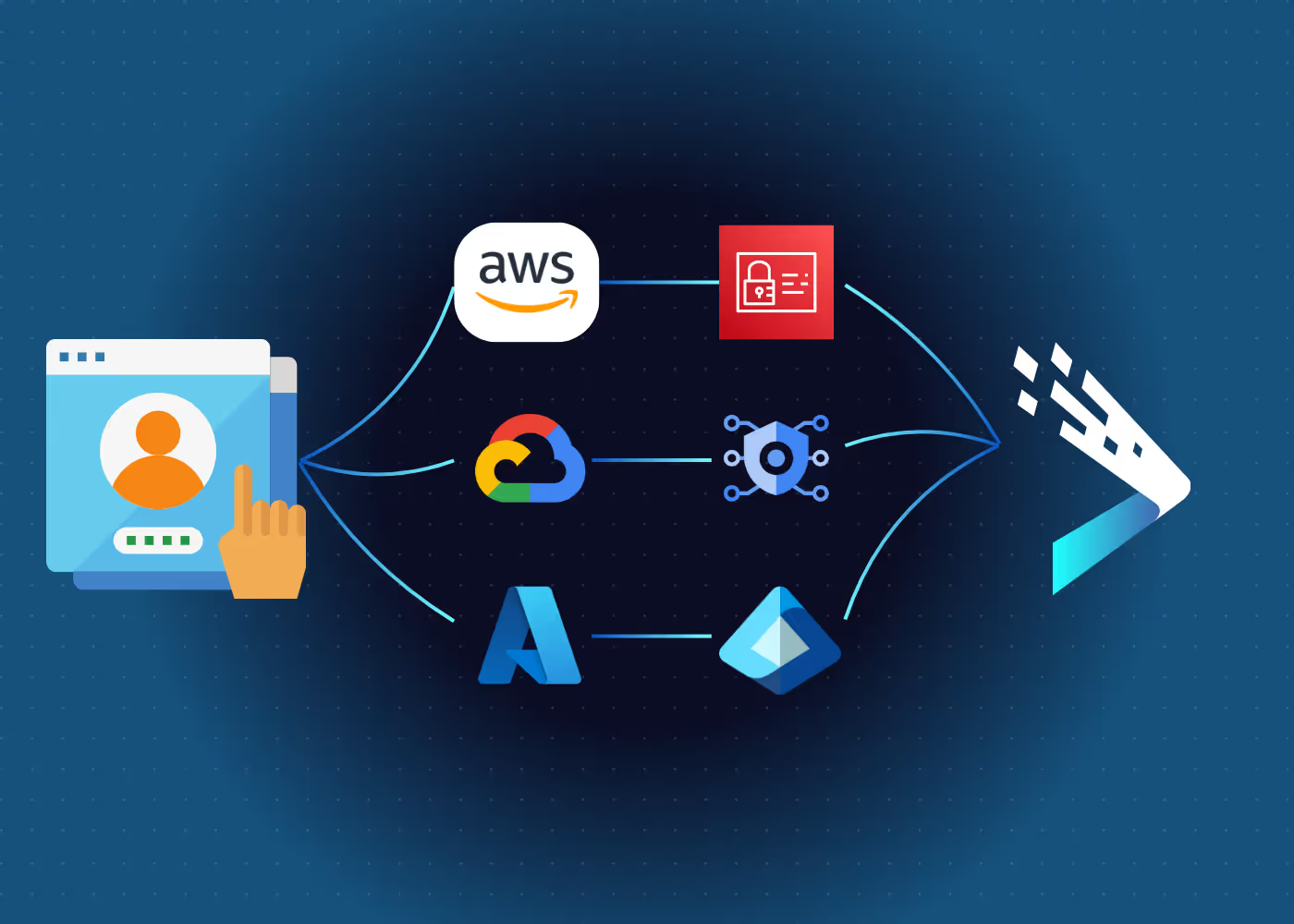

Identity management has always been about ensuring that the right people have access to the right data. With 93% of organizations experiencing two or more identity-related breaches in the past year – and with identity data fragmented and available in different silos – security teams face a broad ‘first-mile’ identity data challenge. How can they create a cohesive and comprehensive identity management strategy without unified visibility?

The Story of Identity Management and the ‘First-Mile’ data challenge

In the past, security teams would have to ensure that only a company’s employees and contractors had access to company data and to keep external individuals, unrecognized devices, and malicious applications out of organizational resources. This usually meant securing data on their own servers and restricting, monitoring, and managing access to this data.

However, two variables evolved rapidly to complicate this equation. First, several external users had to be provided access to some of this data as third-party vendors, customers, and partners needed to access enterprise data for business to continue functioning effectively. With new users coming in, existing standards and systems such as data governance, security controls, and monitoring apparatus did not evolve effectively to ensure consistency in risk exposure and data security.

Second, the explosive growth of cloud and then multi-cloud environments in digital enterprise data infrastructure has created a complex network of different identity and identity data collecting systems: HR platforms, active directories, cloud applications, on-premise solutions, and third-party tools. This makes it difficult for teams and company leadership to get a holistic view of user identities, permissions, and entitlements – without which, enforcing security policies, ensuring compliance, and managing access effectively becomes impossible.

This is the ‘First-Mile’ data challenge. How can enterprise security teams stitch together identity data from a tapestry of different sources and systems, stored in completely different formats, and make it easy to leverage for governance, auditing, and automated workflows?

How DataBahn’s Data Fabric addresses the ‘First-Mile’ data challenge

The ‘First-Mile’ data challenge can be broken down into 3 major components -

- Collecting identity data from different sources and environments into one place;

- Aggregating and normalizing this data into a consistent and accessible format; and

- Storing this data for easy reference in governance-focused, compliance-friendly storage.

When the first-mile identity data challenge is not solved, organizations face gaps in visibility, increased risks like privilege creep, and major inefficiencies in identity lifecycle management, including provisioning and deprovisioning access.

DataBahn’s data fabric addresses the “first-mile” identity data challenge by centralizing identity, access, and entitlement data from disparate systems. To collect identity data, the platform enables seamless and instant no-code integration to add new sources of data, making it easy to connect to and onboard different sources, including raw and unstructured data from custom applications.

DataBahn also automates the parsing and normalization of identity data from different sources, pulling all the different data into one place to tell the complete story. Storing this data in a data lake, together with its data lineage, multi-source correlation and enrichment, and automated transformation and normalization, makes it easily accessible for analysis and compliance. With this in place, enterprises can have a unified source of truth for all identity data across platforms, on-premise systems, and external vendors in the form of an Identity Data Lake.
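To illustrate what normalizing identity data from several systems involves, here is a minimal sketch. It is not DataBahn's implementation; the source names, the raw field names in `FIELD_MAPS`, and both functions are hypothetical, and email is assumed to be the common key across systems.

```python
from collections import defaultdict

# Hypothetical mapping from each source's raw field names to a common schema.
FIELD_MAPS = {
    "hr": {"work_email": "email", "full_name": "name"},
    "directory": {"mail": "email", "displayName": "name", "memberOf": "entitlements"},
    "cloud_app": {"user": "email", "roles": "entitlements"},
}


def normalize(source: str, record: dict) -> dict:
    """Rename raw fields to the common schema and canonicalize the email key."""
    mapping = FIELD_MAPS[source]
    out = {"source": source}
    for raw_field, value in record.items():
        if raw_field in mapping:
            out[mapping[raw_field]] = value
    # Assumes every record carries an email; raises KeyError otherwise.
    out["email"] = out["email"].strip().lower()
    return out


def build_identity_view(records) -> dict:
    """Merge normalized records into one entry per identity, keeping lineage."""
    view = defaultdict(lambda: {"name": None, "entitlements": set(), "sources": []})
    for source, record in records:
        n = normalize(source, record)
        identity = view[n["email"]]
        identity["name"] = identity["name"] or n.get("name")
        identity["entitlements"].update(n.get("entitlements", []))
        identity["sources"].append(source)  # simple lineage trail
    return dict(view)


records = [
    ("hr", {"work_email": "Ana@Example.com ", "full_name": "Ana Ruiz"}),
    ("directory", {"mail": "ana@example.com", "displayName": "Ana R.", "memberOf": ["finance"]}),
    ("cloud_app", {"user": "ANA@example.com", "roles": ["admin"]}),
]
view = build_identity_view(records)
print(view["ana@example.com"])
```

The merged entry shows at a glance which entitlements one person holds across systems and which sources contributed them, which is the kind of view needed to spot privilege creep.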

Benefits of a DataBahn-enabled Identity Data Lake

A DataBahn-powered centralized identity framework empowers organizations with complete visibility into who has access to what systems, ensuring that proper security policies are applied consistently across multi-cloud environments. This approach not only simplifies identity management, but also enables real-time visibility into access changes, entitlements, and third-party risks. By solving the first-mile identity challenge, a data fabric can streamline identity provisioning, enhance compliance, and ultimately, reduce the risk of security breaches in a complex, cloud-native world.

Enabling smarter auditing for Salesforce customers

Enabling smarter and more efficient analytics for Salesforce customers

As the world's leading customer relationship management (CRM) platform, Salesforce has revolutionized the way businesses manage customer relationships and has become indispensable for companies of all sizes. It powers 150,000 companies globally, including 80% of Fortune 500 corporations, and boasts a 21.8% market share in the CRM space - more than its four leading competitors combined. Salesforce becomes the central repository of essential and sensitive customer data, centralizing data from different sources. For many of their customers, Salesforce becomes the single source of truth for customer data, which includes critical transactional and business-critical data with significant security and compliance relevance.

Business leaders need to analyze transaction data for business analytics and dashboarding to enable data-driven decision-making across the organization. However, analyzing Salesforce data (or any other SaaS application) requires significant manual effort and places constraints on data and security engineering bandwidth.

We were able to act as an application data fabric and help a customer optimize Salesforce data analytics and auditing with DataBahn.

How DataBahn's application data fabric enables faster and more efficient real-time analytics

Why is auditing important for Salesforce?

How are auditing capabilities used by application owners?

SaaS applications such as Salesforce have two big auditing use cases: transaction analysis for business analytics reporting, and security monitoring of application access. Transaction analysis on Salesforce data is business critical and is often used to build dashboards and analytics for the C-suite to evaluate key outcomes such as sales effectiveness, demand generation, pipeline value and potential, customer retention, and customer lifetime value. Aggregating data into Salesforce to track revenue generation and associated metrics, and translating them into real-time insights, drives essential data-driven decision-making and strategy for organizations. From a security perspective, it is essential to effectively manage and monitor this data and control access to it. Security teams have to monitor how these applications and the underlying data are accessed, and the prevalent AAA (Authentication, Authorization, and Accounting) framework necessitates a detailed security log and audit to protect data and proactively detect threats.

Why are native audit capabilities not enough?

While auditing capabilities are available, using them requires considerable manual effort. Data needs to be imported manually to be usable for dashboarding. Additionally, data retention windows in these applications natively are short and are not conducive for comprehensive analysis, which is required for both business analytics and security monitoring. This means that data needs to be manually exported from Salesforce or other applications (individual audit reports), cleaned up manually, and then exported to a data lake to perform analytics. Organizations can explore solutions like Databricks or Amazon Security Lake to improve visibility and data security across cloud environments.

Why is secured data retention for auditing critical?

Data stored in SaaS applications is increasingly becoming a target for malicious actors given its commercial importance. Ransomware attacks and data breaches have become more common, and a recent breach in Knowledge Bases for a major global SaaS application is a wake-up call for businesses to focus on securing the data they store in SaaS applications or export from it.

DataBahn as a solution

DataBahn acts as an application data fabric, a middleware solution. Using DataBahn, businesses can easily fork data to multiple consumers and destinations, reducing data engineering effort and ensuring that high-quality data is sent wherever it needs to be, for both simple functions (storage) and higher-order functions (analytics and dashboards). With a single-click integration, DataBahn makes SaaS application data from Salesforce or a variety of other SaaS applications (ServiceNow, Okta, etc.) available for business analytics and security threat detection.

Using DataBahn also helps businesses more efficiently leverage a data lake, a BI solution, or SIEM. The platform enriches data and enables transformation into different relevant formats without manual effort. Discover these and other benefits of using an Application Data Fabric to collect, manage, control, and govern data movement.