The DataBahn Blog

The latest articles, news, blogs and learnings from Databahn

Popular Posts

.png)

Strengthening Compliance and Trust with Data Lineage in Financial Services

Financial data flows are some of the most complex in any industry. Trades, transactions, positions, valuations, and reference data all pass through ETL jobs, market feeds, and risk engines before surfacing in reports. Multiply that across desks, asset classes, and jurisdictions, and tracing a single figure back to its origin becomes nearly impossible. This is why data lineage has become essential in financial services, giving institutions the ability to show how data moved and transformed across systems. So, when regulators, auditors, or even your own board ask: “Where did this number come from?” too many teams still don’t have a clear answer.

The stakes couldn’t be higher. Across frameworks like BCBS-239, the Financial Data Transparency Act, and emerging supervisory guidelines in Europe, APAC, and the Middle East, regulators are raising the bar. Banks that have adopted modern data lineage tools report 57% faster audit prep and ~40% gains in engineering productivity, yet progress remains slow — surveys show that fewer than 10% of global banks are fully compliant with BCBS-239 principles. The result is delayed audits, costly manual investigations, and growing skepticism from regulators and stakeholders alike.

The takeaway is simple: data lineage is no longer optional. It has become the foundation for compliance, risk model validation, and trust. For financial services, what data lineage means is simple: without it, compliance is reactive and fragile; with it, auditability and transparency become operational strengths.

In the rest of this blog, we’ll explore why lineage is so hard to achieve in financial services, what “good” looks like, and how modern approaches are closing the gap.

Why data lineage is so hard to achieve in Financial Services

If lineage were just “draw arrows between systems,” we’d be done. In the real world it fails because of technical edge cases and organizational friction, the stuff that makes tracing a number feel like detective work.

Siloed ownership and messy handoffs

Trade, market, reference and risk systems are often owned by separate teams with different priorities. A single calculation can touch five teams and ten systems; tracing it requires stepping across those boundaries and reconciling different glossaries and operational practices. This isn’t just technical overhead but an ownership problem that breaks automated lineage capture.

Opaque, undocumented transforms in the middle

Lineage commonly breaks inside ETL jobs, bespoke SQL, or one-off spreadsheets. Those transformation steps encode business logic that rarely gets cataloged, and regulators want to know what logic ran, who changed it, and when. That gap is one of the recurring blockers to proving traceability.

Temporal and model lineage

Financial reporting and model validation require not just “where did this value come from?” but “what did it look like at time T?” Capturing temporal snapshots and ensuring you can reconstruct the exact input set for a historical run (with schema versions, parameter sets, and market snapshots) adds another layer of complexity most lineage tools don’t handle out of the box.

Scaling lineage without runaway costs

Lineage at scale is expensive. Streaming trades, tick data and high-cardinality reference tables generate huge volumes of metadata if you try to capture full, row-level lineage. Teams need to balance fidelity, cost, and query ability, and that trade-off is a frequent operational headache.

Organizational friction and change management

Technical fixes only work when governance, process and incentives change too. Lineage rollout touches risk, finance, engineering and compliance, aligning those stakeholders, enforcing cataloging discipline, and maintaining lineage over time is a people problem as much as a technology one.

The real challenge isn’t drawing arrows between systems but designing lineage that regulators can trust, engineers can maintain, and auditors can use in real time. That’s the standard the industry is now being measured against.

What good Data Lineage looks like in finance

Great lineage in financial services doesn’t look like a prettier diagram; it feels like control. The moment an auditor asks, “Where did this number come from?” the answer should take minutes, not weeks. That’s the benchmark.

It’s continuous, not reactive.

Lineage isn’t something you piece together after an audit request. It’s captured in real time as data flows — across trades, models, and reports — so the evidence is always ready.

It’s explainable to both engineers and auditors.

Engineers should see schema versions, transformations, and dependencies. Auditors should see clear traceability and business definitions. Good lineage bridges both worlds without translation exercises.

It scales with the business.

From millions of daily trades to real-time model recalculations, lineage must capture detail without exploding into unusable metadata. That means selective fidelity, efficient storage, and fast query ability built in.

It integrates governance, not adds it later.

Lineage should carry sensitivity tags, policy markers, and glossary links as data moves. Compliance is strongest when it’s embedded upstream, not enforced after the fact.

The point is simple: an effective data lineage makes defensibility the default. It doesn’t slow down data flows or burden teams with extra work. Instead, it builds confidence that every calculation, every report, and every disclosure can be traced and trusted.

Databahn in practice: Data Lineage as part of the flow

Databahn captures lineage as data moves, not after it lands. Rather than relying on manual cataloging, the platform instruments ingestion, parsing, transformation and routing layers so every change — schema update, join, enrichment or filter — is recorded as part of normal pipeline execution. That means auditors, risk teams and engineers can reconstruct a metric, replay a run, or trace a root cause without digging through ad-hoc scripts or spreadsheets.

In production, that capture is combined with selective fidelity controls, snapshotting for time-travel, and business-friendly lineage views so traceability is both precise for engineers and usable for non-technical stakeholders.

Here are a few of the key features in Databahn’s arsenal and how they enable practical lineage:

- Seamless lineage with Highway

Every routing and transformation is tracked natively, giving a complete view from source to report without blind spots. - Real-time visibility and health monitoring

Continuous observability across pipelines detects lineage breaks, schema drift, or anomalies as they happen — not months later. - Governance with history recall and replay

Metadata tagging and audit trails preserve data history so any past report or model run can be reconstructed exactly as it appeared. - In-flight sensitive data handling

PII and regulated fields can be masked, quarantined, or tagged in motion, with those transformations recorded as part of the audit trail. - Schema drift detection and normalization

Automatic detection and normalization keep lineage consistent when upstream systems change, preventing gaps that undermine compliance.

The result is lineage that financial institutions can rely on, not just to pass regulatory checks, but to build lasting trust in their reporting and risk models. With Databahn, data lineage becomes a built-in capability, giving institutions confidence that every number can be traced, defended, and trusted.

The future of Data Lineage in finance

Lineage is moving from a compliance checkbox to a living capability. Regulators worldwide are raising expectations, from the Financial Data Transparency Act (FDTA) in the U.S., to ECB/EBA supervisory guidance in Europe, to data risk frameworks in APAC and the Middle East. Across markets, the signal is the same: traceability can’t be partial or reactive, it has to be continuous.

AI is at the center of this shift. Where teams once relied on static diagrams or manual cataloging, AI now powers:

- Automated lineage capture – extracting flows directly from SQL, ETL code, and pipeline metadata.

- Drift and anomaly detection – spotting schema changes or unusual transformations before they become audit findings.

- Metadata enrichment – linking technical fields to business definitions, tagging sensitive data, and surfacing lineage in auditor-friendly terms.

- Proactive remediation – recommending fixes, rerouting flows, or even self-healing pipelines when lineage breaks.

This is also where modern platforms like Databahn are heading. Rather than stop at automation, Databahn applies agentic AI that learns from pipelines, builds context, and acts, whether that’s updating lineage after a schema drift, tagging newly discovered sensitive fields, or ensuring audit trails stay complete.

Looking forward, financial institutions will also see exploration of immutable lineage records (using distributed ledger technologies) and standardized taxonomies to reduce cross-border compliance friction. But the trajectory is already clear: lineage is becoming real-time, AI-assisted, and regulator-ready by default, and platforms with agentic AI at their core are leading that evolution.

Conclusion: Lineage as the Foundation of Trust

Financial institutions can’t afford to treat lineage as a back-office detail. It’s become the foundation of compliance, the enabler of model validation, and the basis of trust in every reported number.

As regulators raise the bar and AI reshapes data management, the institutions that thrive will be the ones that make traceability a built-in capability, not an afterthought. That’s why modern platforms like DataBahn are designed with lineage at the core. By capturing data in motion, applying governance upstream, and leveraging agentic AI to keep pipelines audit-ready, they make defensibility the default.

If your institution is asking tougher questions about “where did this number come from?”, now is the time to strengthen your lineage strategy. Explore how Databahn can help make compliance, trust, and auditability a natural outcome of your data pipelines. Get in touch for a demo!

Cybersecurity Awareness Month 2025: Why Broken Data Pipelines Are the Biggest Risk You’re Ignoring

Every October, Cybersecurity Awareness Month rolls around with the same checklist: patch your systems, rotate your passwords, remind employees not to click sketchy links. Important, yes – but let’s be real: those are table stakes. The real risks security teams wrestle with every day aren’t in a training poster. They’re buried in sprawling data pipelines, brittle integrations, and the blind spots attackers know how to exploit.

The uncomfortable reality is this: all the awareness in the world won’t save you if your cybersecurity data pipelines are broken.

Cybersecurity doesn’t fail because attackers are too brilliant. It fails because organizations can’t move their data safely, can’t access it when needed, and can’t escape vendor lock-in while dealing with data overload. For too long, we’ve built an industry obsessed with collecting more data instead of ensuring that data can flow freely and securely through pipelines we actually control.

It’s time to embrace what many CISOs, SOC leaders, and engineers quietly admit: your security posture is only as strong as your ability to move and control your data.

The Hidden Weakness: Cybersecurity Data Pipelines

Every security team depends on pipelines, the unseen channels that collect, normalize, and route security data across tools and teams. Logs, telemetry, events, and alerts move through complex pipelines connecting endpoints, networks, SIEMs, and analytics platforms.

And yet, pipelines are treated like plumbing. Invisible until they burst. Without resilient pipelines, visibility collapses, detections fail, and incident response slows to a crawl.

Security teams drowning in data yet starved for the right insights because their pipelines were never designed for flexibility or scale. Awareness campaigns should shine a light on this blind spot. Teams must not only know how phishing works but also how their cybersecurity data pipelines work — where they’re brittle, where data is locked up, and how quickly things can unravel when data can’t move.

Data Without Movement Is Useless

Here’s a hard truth: security data at rest is as dangerous as uncollected evidence.

Storing terabytes of logs in a single system doesn’t make you safer. What matters is whether you can move security data safely when incidents strike.

- Can your SOC pivot logs into a different analytics platform when a breach unfolds?

- Can compliance teams access historical data without waiting weeks for exports?

- Can threat hunters correlate data across environments without being blocked by proprietary formats?

When data can’t move, it becomes a liability. Organizations have failed audits because they couldn’t produce accessible records. Breaches have escalated because critical logs were locked in a vendor’s silo. SOCs have burned out on alert fatigue because pipelines dumped raw, unfiltered data into their SIEM.

Movement is power. Databahn products are designed around the principle that data only has value if it’s accessible, portable, and secure in motion.

Moving Data Safely: The Real Security Priority

Everyone talks about securing endpoints, networks, and identities. But what about the routes your data travels on its way to analysts and detection systems?

The ability to move security data safely isn’t optional. It’s foundational. And “safe” doesn’t just mean encryption at rest. It means:

- Encryption in motion to protect against interception

- Role-based access control so only the right people and tools can touch sensitive data

- Audit trails that prove how and where data flowed

- Zero-trust principles applied to the pipeline itself

Think of it this way: you wouldn’t spend millions on vaults for your bank and then leave your armored trucks unguarded. Yet many organizations do exactly that, lock down storage, while neglecting the pipelines.

This is why Databahn emphasizes pipeline resilience. With solutions like Cruz, we’ve seen organizations regain control by treating data movement as a first-class security priority, not an afterthought.

A New Narrative: Control Your Data, Control Your Security

At the heart of modern cybersecurity is a simple truth: you control your narrative when you control your data.

Control means more than storage. It means knowing where your data lives, how it flows, and whether you can pivot it when threats emerge. It means refusing to accept vendor black boxes that limit visibility. It means architecting pipelines that give you freedom, not dependency.

This philosophy drives our work at Databahn. With Reef helping teams shape, access, and govern security data, and Cruz enabling flexible, resilient pipelines. Together, these approaches echo a broader industry need: break free from lock-in, reclaim control, and treat your pipeline as a strategic asset.

Security teams that control their pipelines control their destiny. Those that don’t remain one vendor outage or one pipeline failure away from disaster.

The Path Forward: Building Resilient Cybersecurity Data Pipelines

So how do we shift from fragile to resilient? It starts with mindset. Security leaders must see data pipelines not as IT plumbing but as strategic assets. That shift opens the door to several priorities:

- Embrace open architectures – Avoid tying your fate to a single vendor. Design pipelines that can route data into multiple destinations.

- Prioritize safe, audited movement – Treat data in motion with the same rigor you treat stored data. Every hop should be visible, secured, and controlled.

- Test pipeline resilience – Run drills that simulate outages, tool changes, and rerouting. If your pipeline can’t adapt in hours, you’re vulnerable.

- Balance cost with control – Sometimes the cheapest storage or analytics option comes with the highest long-term lock-in risk. Awareness must extend to financial and operational trade-offs.

We’ve seen organizations unlock resilience when they stop thinking of pipelines as background infrastructure and start thinking of them as the foundation of cybersecurity itself. This shift isn’t just about tools, it’s about mindset, architecture, and freedom.

The Real Awareness Shift We Need

As Cybersecurity Awareness Month 2025 unfolds, we’ll see the usual campaigns: don’t click suspicious links, don’t ignore updates, don’t recycle passwords. All valuable advice. But we must demand more from ourselves and from our industry.

The real awareness shift we need is this: don’t lose control of your data pipelines.

Because at the end of the day, security isn’t about awareness alone. It’s about the freedom to move, shape, and use your data whenever and wherever you need it.

Until organizations embrace that truth, attackers will always be one step ahead. But when we secure our pipelines, when we refuse lock-in, and when we prioritize safe movement of data, we turn awareness into resilience.

And that is the future cybersecurity needs.

.png)

Recap | From Chaos to Clarity Webinar

Ask any security practitioner what keeps them up at night, and it rarely comes down to a specific tool. It's usually the data itself – is it complete, trustworthy, and reaching the right place at the right time?

Pipelines are the arteries of modern security operations. They carry logs, metrics, traces, and events from every layer of the enterprise. Yet in too many organizations, those arteries are clogged, fragmented, or worse, controlled by someone else.

That was the central theme of our webinar, From Chaos to Clarity, where Allie Mellen, Principal Analyst at Forrester, and Mark Ruiz, Sr. Director of Cyber Risk and Defense at BD, joined our CPO Aditya Sundararam and our CISO Preston Wood.

Together, their perspectives cut through the noise: analysts see a market increasingly pulling practitioners into vendor-controlled ecosystems, while practitioners on the ground are fighting to regain independence and resilience.

The Analyst's Lens: Why Neutral, Open Pipelines Matter

Allie Mellen spends her days tracking how enterprises buy, deploy, and run security technologies. Her warning to practitioners is direct: control of the pipeline is slipping away.

The last five years have seen unprecedented consolidation of security tooling. SIEM vendors offer their own ingestion pipelines. Cloud hyperscalers push their monitoring and telemetry services as defaults. Endpoint and network vendors bolt on log shippers designed to funnel telemetry back into their ecosystems.

It all looks convenient at first. Why not let your SIEM vendor handle ingestion, parsing, and routing? Why not let your EDR vendor auto-forward logs into its own analytics console?

Allie's answer: because convenience is control and you're not the one holding it.

" Practitioners are looking for a tool much like with their SIEM tool where they want something that is independent or that’s kind of how they prioritize this "

— Allie Mellen, Principal Analyst, Forrester

This erosion of control has real consequences:

- Vendor lock-in: Once you're locked into a vendor's pipeline, swapping tools downstream becomes nearly impossible. Want to try a new analytics platform? Your data is tied up in proprietary formats and routing rules.

- Blind spots: Vendor-native pipelines often favor data that benefits the vendor's use cases, not the practitioners’. This creates gaps that adversaries can exploit.

- AI limitations: Every vendor now advertises "AI-driven security." But as Allie points out, AI is only as good as the data it ingests. If your pipeline is biased toward one vendor's ecosystem, you'll get AI outcomes that reflect their blind spots, not your real risk.

For Allie, the lesson is simple: net-neutral pipelines are the only way forward. Practitioners must own routing, filtering, enrichment, and forwarding decisions. They must have the ability to send data anywhere, not just where one vendor prefers.

That independence is what preserves agility, the ability to test new tools, feed new AI models, and respond to business shifts without ripping out infrastructure.

The Practitioner's Challenge: BD's Story of Data Chaos

Theory is one thing, but what happens when practitioners actually lose control of their pipelines? For Becton Dickinson (BD), a global leader in medical technology, the consequences were very real.

BD's environment spanned hospitals, labs, cloud workloads, and thousands of endpoints. Each vendor wanted to handle telemetry in its own way. SIEM agents captured one slice, endpoint tools shipped another, and cloud-native services collected the rest.

The result was unsustainable:

- Duplication: Multiple vendors forwarding the same data streams, inflating both storage and licensing costs.

- Blind spots: Medical device telemetry and custom application logs didn't fit neatly into vendor-native pipelines, leaving dangerous gaps.

- Operational friction: Pipeline management was spread across several vendor consoles, each with its own quirks and limitations.

For BD's security team, this wasn't just frustrating, it was a barrier to resilience. Analysts wasted hours chasing duplicates while important alerts slipped through unseen. Costs skyrocketed, and experimentation with new analytics tools or AI models became impossible.

Mark Ruiz, Sr. Director of Cyber Risk and Defense at BD, knew something had to change.

With Databahn, BD rebuilt its pipeline on neutral ground:

- Universal ingestion: Any source from medical device logs to SaaS APIs could be onboarded.

- Scalable filtering and enrichment: Data was cleaned and streamlined before hitting downstream systems, reducing noise and cost.

- Flexible routing: The same telemetry could be sent simultaneously to Splunk, a data lake, and an AI model without duplication.

- Practitioner ownership: BD controlled the pipeline itself, free from vendor-imposed limits.

The benefits were immediate. SIEM ingestion costs dropped sharply, blind spots were closed, and the team finally had room to innovate without re-architecting infrastructure every time.

" We were able within about eight, maybe ten weeks consolidate all of those instances into one Sentinel instance in this case, and it allowed us to just unify kind of our visibility across our organization."

— Mark Ruiz, Sr. Director, Cyber Risk and Defense, BD

Where Analysts and Practitioners Agree

What's striking about Allie's analyst perspective and Mark's practitioner experience is how closely they align.

Both argue that convenience isn't resilience. Vendor-native pipelines may be easy up front, but they lock teams into rigid, high-cost, and blind-spot-heavy futures.

Both stress that pipeline independence is fundamental. Whether you're defending against advanced threats, piloting AI-driven detection, or consolidating tools, success depends on owning your telemetry flow.

And both highlight that resilience doesn't live in downstream tools. A world-class SIEM or an advanced AI model can only be as good as the data pipeline feeding it.

This alignment between market analysis and hands-on reality underscores a critical shift: pipelines aren't plumbing anymore. They're infrastructure.

The Databahn Perspective

For Databahn, this principle of independence isn't an afterthought—it's the foundation of the approach.

Preston Wood, CSO at Databahn, frames it this way:

"We don't see pipelines as just tools. We see them as infrastructure. The same way your network fabric is neutral, your data pipeline should be neutral. That's what gives practitioners control of their narrative."

— Preston Wood, CSO, Databahn

This neutrality is what allows pipelines to stay future-proof. As AI becomes embedded in security operations, pipelines must be capable of enriching, labeling, and distributing telemetry in ways that maximize model performance. That means staying independent of vendor constraints.

Aditya Sundararam, CPO at Databahn, emphasizes this future orientation: building pipelines today that are AI-ready by design, so practitioners can plug in new models, test new approaches, and adapt without disruption.

Own the Pipeline, Own the Outcome

For security practitioners, the lesson couldn't be clearer: the pipeline is no longer just background infrastructure. It's the control point for your entire security program.

Analysts like Allie warn that vendor lock-in erodes practitioner control. Practitioners like Mark show how independence restores visibility, reduces costs, and builds resilience. And Databahn's vision underscores that independence isn't just tactical, it's strategic.

So the question for every practitioner is this: who controls your pipeline today?

If the answer is your vendor, you've already lost ground. If the answer is you, then you have the agility to adapt, the visibility to defend, and the resilience to thrive.

In security, tools will come and go. But the pipeline is forever. Own it, or be owned by it.

.png)

MITRE under ATT&CK: Rethinking cybersecurity's gold standard

The MITRE ATT&CK Evaluations have entered unexpected choppy waters. Several of the cybersecurity industry’s largest platform vendors have opted out this year, each using the same language about “resource prioritization” and “customer focus”. When multiple leaders step back at once, it raises some hard questions. Is this really about resourcing, or about avoiding scrutiny? Or is it the slow unraveling of a bellwether and much-loved institution?

Speculation is rife; some suggest these giants are wary of being outshone by newer challengers; other believe it reflects uncertainty inside MITRE itself. Whatever the case, the exits have forced a reckoning: does ATT&CK still matter? At Databahn, we believe it does – but only if it evolves into something greater than it is today.

What is MITRE ATT&CK and why it matters

MITRE ATT&CK was born from a simple idea: if we could catalog the real tactics and techniques adversaries use in the wild, defenders everywhere could share a common language and learn from each other. Over time, ATT&CK became more than a knowledge base – it became the Rosetta Stone of modern cybersecurity.

The Evaluations program extended that vision. Instead of relying on vendor claims or glossy datasheets, enterprises could see how different tools performed against emulated threat actors, step by step. MITRE never crowned winners or losers; it simply published raw results, offering a level playing field for interpretation.

That transparency mattered. In an industry awash with noise and marketing spin, ATT&CK Evaluations became one of the few neutral signals that CISOs, SOC leaders, and practitioners could trust. For many, it was less about perfect scores and more about seeing how a tool behaved under pressure – and whether it aligned with their own threat model.

The Misuse and the Criticisms

For years, ATT&CK Evaluations were one of the few bright spots in an industry crowded with vendor claims. CISOs could point to them as neutral, transparent – and at least in theory – immune from spin. In a market that rarely offers apples-to-apples comparisons, ATT&CK stood out as a genuine attempt at objectivity. In defiance of the tragedy of the commons, it remained neutral, with all revenues routed towards doing more research to improve public safety.

The absences of some of the industry’s largest vendors have sparked a firestorm of commentary. While their detractors are skeptical about their near-identical statements and suggest that this was strategic, it raises questions at a time when criticisms of MITRE ATT&CK Evaluations were also growing more strident, pointing to how results were interpreted – or rather, misinterpreted. While MITRE doesn’t crown champions, hand out trophies, or assign grades, vendors have been quick to award themselves with imagined laurels. Raw detection logs are taken and twisted into “best-in-class" coverage, missing the nuance that matters most: whether detections were actionable, whether alerts drowned analysts in noise, and whether the configuration mirrored a real production environment.

The gap became even more stark when evaluation results didn’t line up with enterprise reality. CISOs would see a tool perform flawlessly on paper, only to watch it miss basic detections or drown SOCs with false positives. The disconnect wasn’t the fault of the ATT&CK framework itself, which didn’t intend to simulate the full messiness of a live environment. But this gave critics the ammunition to question whether the program had lost its value.

And of course, there is the Damocles’ sword of AI. In a time of dynamic threats being spun up and vulnerabilities exploited in days, do one-time evaluations of solutions really have the same effectiveness? In short, what was designed to be a transparent reference point too often CISOs and SOC teams were left to sift through competing storylines–especially in an ecosystem where AI-powered speed rendered static frameworks less effective.

Making the gold standard shine again

For all its flaws and frustrations, ATT&CK remains the closest thing cybersecurity has to a gold standard. No other program managed to establish such a widely accepted, openly accessible benchmark for adversary behavior. For CISOs and SOC leaders, it has become the shared map that allows them to compare tools, align on tactics, and measure their own defenses against a common framework.

Critics are right to point out the imperfections in MITRE Evaluations. But in a non-deterministic security landscape – where two identical attacks can play out in wildly different ways – imperfection is inevitable. What makes ATT&CK different is that it provides something few others do: neutrality. Unlike vendor-run bakeoffs, pay-to-play analyst reports, or carefully curated customer case studies, ATT&CK offers a transparent record of what happened, when, and how. No trophies, no hidden methodology, no commercial bias. Just data.

That’s why, even as some major players step away, ATT&CK still matters. It is not a scoreboard and never should have been treated as one. It is a mirror that shows us where we stand, warts and all. And when that mirror is held up regularly, it keeps vendors honest, challengers motivated, and buyers better informed. And most importantly, it keeps us all safer and better prepared for the threats we face today.

Yet, holding up a mirror once a year is no longer enough. The pace of attacks has accelerated, AI is transforming both offense and defense, and enterprises can’t afford to wait for annual snapshots. If ATT&CK is to remain the industry’s north star, it must evolve into something more dynamic – capable of keeping pace with today’s threats and tomorrow’s innovations.

From annual tests to constant vigilance

If ATT&CK is to remain the north star of cybersecurity, it cannot stay frozen in its current form. Annual, one-off evaluations feel outdated in today’s fast-paced threat landscape. The need is to test enterprise deployments, not security tools in sterilized conditions.

In one large-scale study, researchers mapped enterprise deployments against the same MITRE ATT&CK techniques used in evaluations. The results were stark: despite high vendor scores in controlled settings, only 2% of adversary behaviors were consistently detected in product. That kind of drop-off exposes a fundamental gap – not in MITRE’s framework itself, but in how it is being used.

The future of ATT&CK must be continuous. Enterprises should be leveraging the framework to test their systems, because that is what is being attacked and under threat. These tests should be a consistent process of stress-testing, learning, and improving. Organizations should be able to validate their security posture against MITRE techniques regularly – with results that reflect live data, not just laboratory conditions.

This vision is no longer theoretical. Advances in data pipeline management and automation now make it possible to run constant, low friction checks on how telemetry maps to ATT&CK. At Databahn, we’ve designed our platform to enable exactly this: continuous visibility into coverage, blind spots, and gaps in real-world environments. By aligning security data flows directly with ATT&CK, we help enterprises move from static validation to dynamic, always-on confidence.

Vendors shouldn’t abandon MITRE ATT&CK Evaluations; they should make it a module in their products, to enable enterprises to consistently evaluate their security posture. This will ensure that enterprises can keep better pace with an era of relentless attack and rapid innovation. The value of ATT&CK was never in a single set of results – but in the discipline of testing, interpreting, and improving, again and again.

Databricks + Databahn: The Next Era of Data Intelligence for Cybersecurity

In cybersecurity today, the most precious resource is not the latest tool or threat feed – it is intelligence. And this intelligence is only as strong as the data foundation that creates it from the petabytes of security telemetry drowning enterprises today. Security operation centers (SOCs) worldwide are being asked to defend at AI speed, while still struggling to navigate a tidal wave of logs, redundant alerts, and fragmented systems.

This is less about a product release and more about a movement—a movement that places data at the foundation for agentic, AI-powered cybersecurity. It signals a shift in how the industry must think about security data: not as exhaust to be stored or queried, but as a living fabric that can be structured, enriched, and made ready for AI-native defense.

At DataBahn, we are proud to partner with Databricks and fully integrate with their technology. Together, we are helping enterprises transition from reactive log management to proactive security intelligence, transforming fragmented telemetry into trusted, actionable insights at scale.

From Data Overload to Data Intelligence

For decades, the industry’s instinct has been to capture more data. Every sensor, every cloud workload, and every application heartbeat is shipped to a SIEM or stored in a data lake for later investigation. The assumption was simple: more data equals better defense. But in practice, this approach has created more problems for enterprises.

Enterprises now face terabytes of daily data ingestion, much of which is repetitive, irrelevant, or misaligned with actual detection needs. This data also comes in different formats from hundreds and thousands of devices, and security tools and systems are overwhelmed by noise. Analysts are left searching for needles in haystacks, while adversaries increasingly leverage AI to strike more quickly and precisely.

What’s needed is not just scale, but intelligence: the ability to collect vast volumes of security data and to understand, prioritize, analyze, and act on it while it is in motion. Databricks provides the scale and flexibility to unify massive volumes of telemetry. DataBahn brings the data collection, in-motion enrichment, and AI-powered tiering and segmenting that transform raw telemetry into actionable insights.

Next-Gen Security Data Infrastructure Platform

Databricks is the foundation for operationalizing AI at scale in modern cyber defense, enabling faster threat detection, investigation, and response. It enables the consolidation of all security, IT, and business data into a single, governed Data Intelligence Platform – which becomes a ready dataset for AI to operate on. When you combine this with DataBahn, you create an AI-ready data ecosystem that spans from source to destination and across the data lifecycle.

DataBahn sits on the left of Databricks, ensuring decoupled and flexible log and data ingestion into downstream SIEM solutions and Databricks. It leverages Agentic AI for data flows, automating the ingestion, parsing, normalization, enrichment, and schema drift handling of security telemetry across hundreds of formats. No more brittle connectors, no more manual rework when schemas drift. With AI-powered tagging, tracking, and tiering, you ensure that the correct data goes to the right place and optimize your SIEM license costs.

Agentic AI is leveraged to deliver insights and intelligence not just to data at rest, stored in Databricks, but also in flight via a persistent knowledge layer. Analysts can ask real questions in natural language and get contextual answers instantly, without writing queries or waiting on downstream indexes. Security tools and AI applications can access this layer to reduce time-to-insight and MTTR even more.

The solution brings the data intelligence vision tangible for security and is in sync with DataBahn’s vision for Headless Cyber Architecture. This is an ecosystem where enterprises control their own data in Databricks, and security tools (such as the SIEM) do less ingestion and more detection. Your Databricks security data storage becomes the source of truth.

Making the Vision Real for Enterprises

Security leaders don’t need another dashboard or more security tools. They need their teams to move faster and with confidence. For that, they need their data to be reliable, contextual, and usable – whether the task is threat hunting, compliance, or powering a new generation of AI-powered workflows.

By combining Databricks’ unified platform with DataBahn’s agentic AI pipeline, enterprises can:

- Cut through noise at the source: Filter out low-value telemetry before it ever clogs storage or analytics pipelines, preserving only what matters for detection and investigation.

- Enrich with context automatically: Map events against frameworks such as MITRE ATT&CK, tag sensitive data for governance, and unify signals across IT, cloud, and OT environments.

- Accelerate time to insight: Move away from waiting hours for query results to getting contextual answers in seconds, through natural language interaction with the data itself. Get insights from data in motion or stored/retained data, kept in AI-friendly structures for investigation.

- Power AI-native security apps: Feed consistent, high-fidelity telemetry into Databricks models and downstream security tools, enabling generative AI to act with confidence and explainability. Leverage Reef for insight-rich data to reduce compute costs and improve response times.

For SOC teams, this means less time spent triaging irrelevant alerts and more time preventing breaches. For CISOs, this means greater visibility and control across the entire enterprise, while empowering their teams to achieve more at lower costs. For the business, it means security and data ownership that scale with innovation.

A Partnership Built for the Future

Databricks’ Data Intelligence for Cybersecurity brings the scale and governance enterprises need to unify their data at rest as a central destination. With DataBahn, data arrives in Databricks already optimized – AI-powered pipelines make it usable, insightful, and actionable in real time.

This partnership goes beyond integration – it lays the foundation for a new era of cybersecurity, where data shifts from liability to advantage in unlocking generative AI for defense. Together, Databricks’ platform and DataBahn’s intelligence layer give security teams the clarity, speed, and agility they need against today’s evolving threats.

What Comes Next

The launch of Data Intelligence for Cybersecurity is only the beginning. Together, Databricks and DataBahn are helping enterprises reimagine how they collect, manage, secure, and leverage data.

The vision is clear – a platform that is:

- Lightweight and modular – collect data from any source effortlessly, including AI-powered integration for custom applications and microservices.

- Broadly integrated – DataBahn comes with a library of collectors for aggregating and transforming telemetry, while Databricks creates a unified data storage for the telemetry.

- Intelligently optimized – remove 60-80% of non-security-relevant data and keep it out of your SIEM to save on costs; eventually, make your SIEM work as a detection engine on top of Databricks as a storage layer for all security telemetry.

- Enrichment-first – apply threat intel, identify, geospatial data, and other contextual information before forwarding data into Databricks and your SIEM to make analysis and investigations faster and smarter.

- AI-ready – feeding clean, contextualized, and enriched data into Databricks to be fed into your models and your AI applications – for metrics and richer insights, they can also leverage Reef to save on compute.

This is the next era of security – and it starts with data. Together, Databricks and DataBahn provide an AI-native foundation in which telemetry is self-optimized and stored in a way to make insights instantly accessible. Data is turned into intelligence, and intelligence is turned into action.

.png)

How to Optimize Sensitive Data Discovery in telemetry and pipelines

Every enterprise handles sensitive data: customer personally identifiable information (PII), employee credentials, financial records, and health information. This is the information SOCs are created to protect, and what hackers are looking to acquire when they attack enterprise systems. Yet, much of it still flows through enterprise networks and telemetry systems in cleartext – unhashed, unmasked, and unencrypted. For attackers, that’s gold. Sensitive data in cleartext complicates detection, increases the attack surface, and exposes organizations to devastating breaches and compliance failures.

When Uber left plaintext secrets and access keys in logs, attackers walked straight in. Equifax’s breach exposed personal records of 147 million people, fueled by poor handling of sensitive data. These aren’t isolated mistakes – they’re symptoms of a systemic failure: enterprises don’t know when and where sensitive data is moving through their systems. Security leaders who rely on firewalls and SIEMs to cover them, but if PII is leaking undetected in logs, you’ve already lost half the battle.

That’s where sensitive data discovery comes in. By detecting and controlling sensitive data in motion – before it spreads – you can dramatically reduce risk, stop attackers from weaponizing leaks, and restrict lateral movement attacks. It also protects enterprises from compliance liability by establishing a more stable, leak-proof foundation for storing sensitive and private customer data. Customers are also more likely to trust businesses that don’t lose their private data to harmful or malicious actors.

The Basics of Sensitive Data Discovery

Sensitive data discovery is the process of identifying, classifying, and protecting sensitive information – such as PII, protected health information (PHI), payment data, and credentials – as it flows across enterprise data systems.

Traditionally, enterprises focus discovery efforts on data at rest (databases, cloud storage, file servers). While critical, this misses the reality of today’s SOC: sensitive data often appears in transit, embedded in logs, telemetry, and application traces. And when attackers access data pipelines, they can find credentials to access more sensitive systems as well.

Examples include:

- Cleartext credentials logged by applications

- Social security information or credit card data surfacing in customer service logs

- API keys and tokens hardcoded or printed into developer logs

These fragments may seem small, but to attackers, they are the keys to the kingdom. Once inside, they can pivot through systems, exfiltrate data, or escalate privileges.

Discovery ensures that these signals are flagged, masked, or quarantined before they reach SIEMs, data lakes, or external tools. It provides SOC teams with visibility into where sensitive data lives in-flight, helping them enforce compliance (GDPR, PCI DSS, HIPAA), while improving detection quality. Sensitive data discovery is about finding your secrets where they might be exposed before adversaries do.

Why is sensitive data discovery so critical today?

Preventing catastrophic breaches

Uber’s 2022 breach had its root cause traced back to credentials sitting in logs without encryption. Equifax’s 2017 breach, one of the largest in history, exposed PII that was transmitted and secured insecurely. In both cases, attackers didn’t need zero-days – they just needed access to mishandled sensitive data.

Discovery reduces this risk by flagging and quarantining sensitive data before it becomes an attacker’s entry point.

Reducing SOC complexity

Sensitive data in logs slows and encumbers detection workflows. A single leaked API key can generate thousands of false positive alerts if not filtered. By detecting and masking PII upstream, SOCs reduce noise and focus on real threats.

Enabling compliance at scale

Regulations like PCI DSS and GDPR require organizations to prevent sensitive data leakage. Discovery ensures that data pipelines enforce compliance automatically – masking credit card numbers, hashing identifiers, and tagging logs for audit purposes.

Accelerating investigations

When breaches happen, forensic teams need to know: did sensitive data move? Where? How much? Discovery provides metadata and lineage to answer these questions instantly, cutting investigation times from weeks to hours.

Sensitive data discovery isn’t just compliance hygiene. It directly impacts threat detection, SOC efficiency, and breach prevention. Without it, you’re blind to one of the most common (and damaging attack vectors in the enterprise.

Challenges & Common Pitfalls

Despite its importance, most enterprises struggle with identifying sensitive data.

Blind spots in telemetry

Many organizations lack the resources to monitor their telemetry streams closely. Yet, sensitive data leaks happen in-flight, where logs cross applications, endpoints, and cloud services.

Reliance on brittle rules

Regex filters and static rules can catch simple patterns but miss variations. Attackers exploit this, encoding or fragmenting sensitive data to bypass detection.

False positives and alert fatigue

Overly broad rules flag benign data as sensitive, overwhelming analysts and hindering their ability to analyze data effectively. SOCs end up tuning out alerts – the very ones that could signal a real leak.

Lack of source-specific controls

Different log sources behave differently. A developer log might accidentally capture secrets, while an authentication system might emit password hashes. Treating all sources the same creates blind spots.

Manual effort and scale

Traditional discovery depends on engineers writing regex and manually classifying data. With terabytes of telemetry per day, this is unsustainable. Sensitive data moves faster than human teams can keep up.

This results in enterprises either over collecting telemetry, flooding SIEMs with sensitive data they can’t detect or protect with static rules, or under collect, missing critical signals. Either way, adversaries exploit the cracks.

Solutions and Best Practices

The way forward is not more manual regex or brittle SIEM rules. These are reactive, error-prone, and impossible to scale.

A data pipeline-first approach

Sensitive data discovery works best when built directly into the security data pipeline – the layer that collects, parses, and routes telemetry across the enterprise.

Best practices include:

- In-flight detection

Identify sensitive data as it moves through the pipeline. Flag credit card numbers, SSNs, API keys, and other identifiers in real time, before they land in SIEMs or storage. - Automated masking and quarantine

Apply configurable rules to mask, hash, or quarantine sensitive data at the source. This ensures SOCs don’t accidentally store cleartext secrets while preserving the ability to investigate. - Source-specific rules

Build edge intelligence. Lightweight agents at the point of collection should apply rules tuned for each source type to avoid PII moving without protection anywhere in the system. - AI-powered detection

Static rules can’t keep pace. AI models can learn what PII looks like – even in novel formats – and flag it automatically. This drastically reduces false positives while improving coverage. - Pattern-friendly configurability

Security teams should be able to define their own detection logic for sensitive data types. The pipeline should combine human-configured patterns with AI-powered discovery. - Telemetry observability

Treat insensitive data detection as part of pipeline health. SOCs require dashboards to view what sensitive data was flagged, masked, or quarantined, along with its lineage for audit purposes.

When discovery is embedded in the pipeline, sensitive data doesn’t slip downstream. It’s caught, contained, and controlled at the source.

How DataBahn can help

DataBahn is redefining how enterprises manage security data, making sensitive data discovery a core function of the pipeline.

At the platform level, DataBahn enables enterprises to:

- Identify sensitive information in-flight and in-transit across pipelines – before it reaches SIEMs, lakes, or external systems.

- Apply source-specific rules at edge collection, using lightweight agents to protect, mask, and quarantine sensitive data from end to end.

- Leverage AI-powered, pattern-friendly detection to automatically recognize and learn what PII looks like, improving accuracy over time.

This approach turns sensitive data protection from an afterthought into a built-in control. Instead of relying on SIEM rules or downstream DLP tools, DataBahn ensures sensitive data is identified, governed, and secured at the earliest possible stage – when it enters the pipeline.

Conclusion

Sensitive data leaks aren’t hypothetical; they’re happening today. Uber’s plaintext secrets and Equifax’s exposed PII – these were avoidable, and they demonstrate the dangers of storing cleartext sensitive data in logs.

For attackers, one leaked credential is enough to breach an enterprise. For regulators, one exposed SSN is enough to trigger fines and lawsuits. For customers, even one mishandled record can be enough to erode trust permanently.

Relying on manual rules and hope is no longer acceptable. Enterprises need sensitive data discovery embedded in their pipelines – automated, AI-powered, and source-aware. That’s the only way to reduce risk, meet compliance, and give SOCs the control they desperately need.

Sensitive data discovery is not a nice-to-have. It’s the difference between resilience and breach.

AI-powered breaches: AI is turning Telemetry into an attack surface

A wake-up call from Salesforce

The recent Salesforce breach should serve as a wake-up call for every CISO and CTO. In this incident, AI bots armed with stolen credentials stole massive amounts of data using AI bots and stolen credentials to move laterally in ways legacy defenses weren’t prepared to stop. The lesson is clear: attackers are no longer just human adversaries – they’re deploying agentic AI to move with scale, speed, and persistence.

This isn’t an isolated case. Threat actors are now leveraging AI to weaponize the weakest links in enterprise infrastructure, and one of the most vulnerable surfaces is telemetry data in motion. Unlike hardened data lakes and encrypted storage, telemetry pipelines often carry credentials, tokens, PII, and system context in plaintext or poorly secured formats. These streams, replicated across brokers, collectors, and SIEMs, are ripe for AI-powered exploitation.

The stakes are simple: if telemetry remains unguarded, AI will find and weaponize what you missed.

Telemetry in the age of AI: What it is and what it hides

Telemetry – logs, traces, metrics, and events data – has been treated as operational “exhaust” in digital infrastructure for the last 2-3 decades. It flows continuously from SaaS apps, cloud services, microservices, IoT/OT devices, and security tools into SIEMs, observability platforms, and data lakes. But in practice, telemetry is:

- High volume and heterogeneous: pulled from thousands of sources across different ecosystems, raw telemetry comes in a variety of different formats that are very contextual and difficult to parse and normalize

- Loosely governed: less rigorously controlled then data at rest; often duplicated, unprocessed before being moved, and destined for a variety of different tools and destinations

- Widely replicated: stored in caches, queues, and temporary buffers multiple times en route

Critically, telemetry often contains secrets. API keys, OAuth tokens, session IDs, email addresses, and even plaintext passwords leak into logs and traces, Despite OWASP (Open Worldwide Application Security Project) and OTel (OpenTelemetry) guidance to sanitize at the source, most organizations still rely on downstream scrubbing. By then, the sensitive data has already transited multiple hops. This happens because security teams view telemetry as “ops noise” rather than an active attack surface. If a bot scraped your telemetry flow for an hour, what credentials or secrets would it find?

Why this matters now: AI has changed the cost curve

Three developments make telemetry a prime target today:

AI-assisted breaches are real

The recent Salesforce breach showed that attackers no longer rely on manual recon or brute force. With AI bots, adversaries chain stolen credentials with automated discovery to expand their foothold. What once took weeks of trial-and-error can now be scripted and executed in minutes.

AI misuse is scaling faster than expected

“Vibe hacking” would be laughable if it wasn’t a serious threat. Anthropic recently disclosed that they had detected and investigated a malicious actor that had used Claude to generate exploit code, reverse engineer vulnerabilities, and accelerate intrusion workflows. What’s chilling is not just the capability – but the automation of persistence. AI agents don’t get tired, don’t miss details, and can operate continuously across targets.

Secrets in telemetry are the low-hanging fruit

Credential theft remains the #1 initial action in breaches. Now, AI makes it trivial to scrape secrets from sprawling logs, correlate them across systems, and weaponize them against SaaS, cloud, and OT infrastructure. Unlike data at rest, data in motion is transient, poorly governed, and often invisible or to the left of traditional SIEM rules.

The takeaway? Attackers are combining stolen credentials from telemetry with AI automation to multiply their effectiveness.

Where enterprises get burned – common challenges

Most enterprises secure data at rest but leave data in motion exposed. The Salesforce incident highlights this blind spot: the weak link wasn’t encrypted storage but credentials exposed in telemetry pipelines. Common failure patterns include:

- Over-collection mindset:

Shipping everything “just in case”, including sensitive fields like auth headers or query payloads. - Downstream-only reaction:

Scrubbing secrets inside SIEMs – after they’ve crossed multiple hops and have left duplicates in various caches. - Schema drift:

New field names can bypass static masking rules, silently re-exposing secrets. - Broad permissions:

Message brokers and collectors – and AI bots and agents – often run with wide service accounts, becoming perfect targets. - Observability != security:

Telemetry platforms optimize for visibility, not policy enforcement. - No pipeline observability:

Teams monitor telemetry pipelines like plumbing, focusing on throughput but ignoring sensitive-field policy violations or policy gaps. - Incident blind spots: When breaches occur, teams can’t trace which sensitive data moved where – delaying containment and raising compliance risk.

Securing data in motion: Principles & Best Practices

If data in motion is now the crown jewel target, the defense must match. A modern telemetry security strategy requires:

- Minimize at the edge:

- Default-deny sensitive collection. Drop or hash secrets at the source before the first hop.

- Apply OWASP and OpenTelemetry guidance for logging hygiene.

- Policy as code:

- Codify collection, redaction, routing, and retention rules as version-controlled policy.

- Enforce peer review for changes that affect sensitive fields.

- Drift-aware redaction:

- Use AI-driven schema detection to catch new fields and apply auto-masking

- Encrypt every hop:

- mTLS (Mutual Transport Layer Security) between collectors, queues, and processors

- Short-lived credentials and isolated broker permissions

- Sensitivity-aware routing:

- Segment flows: send only detection-relevant logs to SIEM, archive the rest in low-cost storage

- ATT&CK-aligned visibility:

- Map log sources to MITRE ATT&CK techniques; onboard what improves coverage, not just volume.

- Pipeline observability:

- Monitor for unmasked fields, anomalous routing, or unexpected destinations.

- Secret hygiene:

- Combine CI/CD secret scanning with real-time telemetry scanning

- Automate token revocation and rotation when leaks occur

- Simulate the AI adversary:

- Run tabletop exercises assuming an AI bot is scraping your pipelines

- Identify what secrets it would find, and see how fast you can revoke them

DataBahn: Purpose-built for Data-in-motion Security

DataBahn was designed for exactly this use-case: building secure, reliable, resilient, and intelligent telemetry pipelines. Identifying, isolating, and quarantining PII is a feature the platform was built around.

- At the source: Smart Edge and its lightweight agents or phantom collectors allow for the dropping or masking of sensitive fields at the source. It also provides local encryption, anomaly detection, and silent-device monitoring.

- In transit: Cruz learns schemas to detect and prevent drift; automates the masking of PII data; learns what data is sensitive and proactively catches it

This reduces the likelihood of breach, makes it harder for bad actors to access credentials and move laterally, and elevates telemetry from a low-hanging fruit to a secure data exchange.

Conclusion: Telemetry is the new point to defend

The Salesforce breach demonstrated that attackers don’t need to brute-force their way into your systems—they just have to extract what you’ve already leaked within your data networks. Anthropic’s disclosure of Claude misuse highlights that this problem will grow faster than defenders are capable of handling or are prepared for.

The message is clear: AI has collapsed the time between leak and loss. Enterprises must treat telemetry as sensitive, secure it in motion, and monitor pipelines as rigorously as they monitor applications.

DataBahn offers a 30-minute Data-in-Motion Risk Review. In that session, we’ll map your top telemetry sources to ATT&CK, highlight redaction gaps, and propose a 60-day hardening plan tailored to your SIEM and AI roadmap.

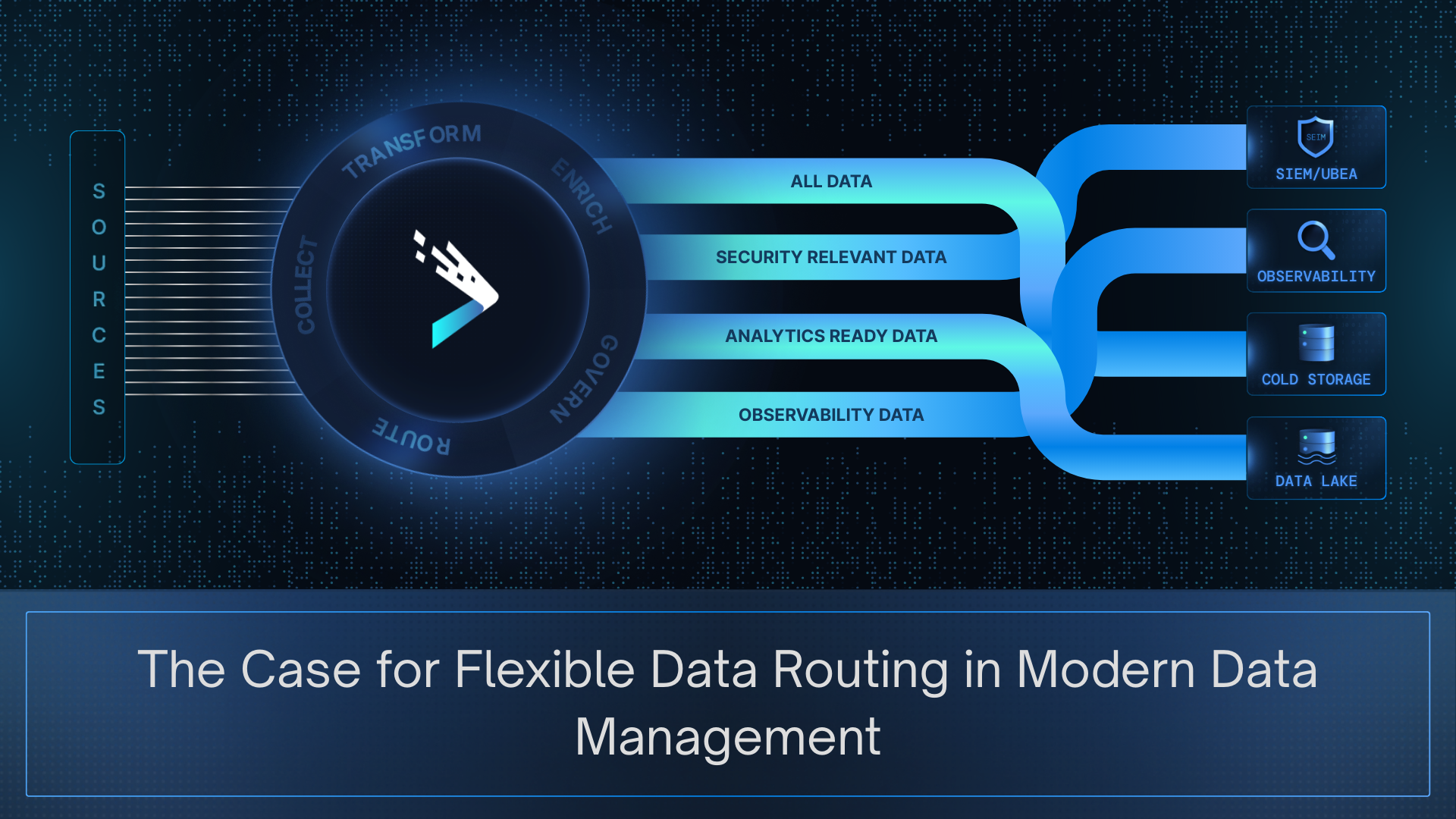

The Case for Flexible Data Routing in Modern Data Management

Most organizations no longer struggle to collect data. They struggle to deliver it where it creates value. As analytics, security, compliance, and AI teams multiply their toolsets, a tangled web of point-to-point pipelines and duplicate feeds has become the limiting factor. Industry studies report that data teams spend 20–40% of their time on data management pipeline maintenance, and rework. That maintenance tax slows innovation, increases costs, and undermines the reliability of analytics.

When routing is elevated into the pipeline layer with flexibility and control, this calculus changes. Instead of treating routing as plumbing, enterprises can deliver the right data, in the right shape, to the right destination, at the right cost. This blog explores why flexible data routing and data management matters now, common pitfalls of legacy approaches, and how to design architectures that scale with analytics and AI.

Why Traditional Data Routing Holds Enterprises Back

For years, enterprises relied on simple, point-to-point integrations: a connector from each source to each destination. That worked when data mostly flowed into a warehouse or SIEM. But in today’s multi-tool, multi-cloud environments, these approaches create more problems than they solve — fragility, inefficiency, unnecessary risk, and operational overhead.

Pipeline sprawl

Every new destination requires another connector, script, or rule. Over time, organizations maintain dozens of brittle pipelines with overlapping logic. Each change introduces complexity, and troubleshooting becomes slow and resource intensive. Scaling up only multiplies the problem.

Data duplication and inflated costs

Without centralized data routing, the same stream is often ingested separately by multiple platforms. For example, authentication logs might flow to a SIEM, an observability tool, and a data lake independently. This duplication inflates ingestion and storage costs, while complicating governance and version control.

Vendor lock-in

Some enterprises route all data into a single tool, like a SIEM or warehouse, and then export subsets elsewhere. This makes the tool a de facto “traffic controller,” even though it was never designed for that role. The result: higher switching costs, dependency risks, and reduced flexibility when strategies evolve.

Compliance blind spots

Different destinations demand different treatments of sensitive data. Without flexible data routing, fields like user IDs or IP addresses may be inconsistently masked or exposed. That inconsistency increases compliance risks and complicates audits.

Engineering overhead

Maintaining a patchwork of pipelines consumes valuable engineering time. Teams spend hours fixing schema drift, rewriting scripts, or duplicating work for each new destination. That effort diverts resources from critical operations and delays analytics delivery.

The outcome is a rigid, fragmented data routing architecture that inflates costs, weakens governance, and slows the value of data management. These challenges persist because most organizations still rely on ad-hoc connectors or tool-specific exports. Without centralized control, data routing remains fragmented, costly, and brittle.

Principles of Flexible Data Routing

For years, routing was treated as plumbing. Data moved from point A to point B, and as long as it arrived, the job was considered done. That mindset worked when there were only one or two destinations to feed. It does not hold up in today’s world of overlapping analytics platforms, compliance stores, SIEMs, and AI pipelines.

A modern data pipeline management platform introduces routing as a control layer. The question is no longer “can we move the data” but “how should this data be shaped, governed, and delivered across different consumers.” That shift requires a few guiding principles.

Collection should happen once, not dozens of times. Distribution should be deliberate, with each destination receiving data in the format and fidelity it needs. Governance should be embedded in the pipeline layer so that policies drive what is masked, retained, or enriched. Most importantly, routing must remain independent of any single tool. No SIEM, warehouse, or observability platform should define how all other systems receive their data.

These principles are less about mechanics than about posture. A smart, flexible, data routing architecture ensures efficiency at scale, governance and contextualized data, and automation. Together they represent an architectural stance that data deserves to travel with intent, shaped and delivered according to value.

The Benefits of Flexible, Smart, and AI-Enabled Routing

When routing is embedded in centralized data pipelines rather than bolted on afterward, the advantages extend far beyond cost. Flexible data routing, when combined with smart policies and AI-enabled automation, resolves the bottlenecks that plague legacy architectures and enables teams to work faster, cleaner, and with more confidence.

Streamlined operations

A single collection stream can serve multiple destinations simultaneously. This removes duplicate pipelines, reduces source load, and simplifies monitoring. Data moves through one managed layer instead of a patchwork, giving teams more predictable and efficient operations.

Agility at scale

New destinations no longer mean hand-built connectors or point-to-point rewiring. Whether it is an additional SIEM, a lake house in another cloud, or a new analytics platform, routing logic adapts quickly without forcing costly rebuilds or disrupting existing flows.

Data consistency and reliability

A centralized pipeline layer applies normalization, enrichment, and transformation uniformly. That consistency ensures investigations, queries, and models all receive structured data they can trust, reducing errors and making cross-platform analytics.

Compliance assurance

Policy-driven routing within the pipeline allows sensitive fields to be masked, transformed, or redirected as required. Instead of piecemeal controls at the tool level, compliance is enforced upstream, reducing risk of exposure and simplifying audits.

AI and analytics readiness

Well-shaped, contextual telemetry can be routed into data lakes or ML pipelines without additional preprocessing. The pipeline layer becomes the bridge between raw telemetry and AI-ready datasets.

Together, these benefits elevate routing from a background function to a strategic enabler. Enterprises gain efficiency, governance, and the agility to evolve their architectures as data needs grow.

Real-World Strategies and Use Cases

Flexible routing proves its value most clearly in practice. The following scenarios show how enterprises apply it to solve everyday challenges that brittle pipelines cannot handle:

Security + analytics dual routing

Authentication and firewall logs can flow into a SIEM for detection while also landing in a data lake for correlation and model training. Flexible data routing makes dual delivery possible, and smart routing ensures each destination receives the right format and context.

Compliance-driven routing

Personally identifiable information can be masked before reaching a SIEM but preserved in full within a compliant archive. Smart routing enforces policies upstream, ensuring compliance without slowing operations.

Performance optimization

Observability platforms can receive lightweight summaries to monitor uptime, while full-fidelity logs are routed into analytics systems for deep investigation. Flexible routing splits the streams, while AI-enabled capabilities can help tune flows dynamically as needs change.

AI/ML pipelines

Machine learning workloads demand structured, contextual data. With AI-enabled routing, telemetry is normalized and enriched before delivery, making it immediately usable for model training and inference.

Hybrid and multi-cloud delivery

Enterprises often operate across multiple regions and providers. Flexible routing ensures a single ingest stream can be distributed across clouds, while smart routing applies governance rules consistently and AI-enabled features optimize routing for resilience and compliance.

Building for the future with Flexible Data Routing

The data ecosystem is expanding faster than most architectures can keep up with. In the next few years, enterprises will add more AI pipelines, adopt more multi-cloud deployments, and face stricter compliance demands. Each of these shifts multiplies the number of destinations that need data and the complexity of delivering it reliably.

Flexible data routing offers a way forward enabling multi-destination delivery. Instead of hardwired connections or duplicating ingestion, organizations can ingest once and distribute everywhere, applying the right policies for each destination. This is what makes it possible to feed SIEM, observability, compliance, and AI platforms simultaneously without brittle integrations or runaway costs.

This approach is more than efficiency. It future-proofs data architectures. As enterprises add new platforms, shift workloads across clouds, or scale AI initiatives, multi-destination routing absorbs the change without forcing rework. Enterprises that establish this capability today are not just solving immediate pain points; they are creating a foundation that can absorb tomorrow’s complexity with confidence.

From Plumbing to Strategic Differentiator

Enterprises can’t step into the future with brittle, point-to-point pipelines. As data environments expand across clouds, platforms, and use cases, routing becomes the factor that decides whether architectures scale with confidence or collapse under their own weight. A modern routing layer isn’t optional anymore; it’s what holds complex ecosystems together.

With DataBahn, flexible data routing is part of an intelligent data layer that unifies collection, parsing, enrichment, governance, and automation. Together, these capabilities cut noise, prevent duplication, and deliver contextual data for every destination. The outcome is data management that flows with intent: no duplication, no blind spots, no wasted spend, just pipelines that are faster, cleaner, and built to last.

SIEM Evaluation Checklist for Modern Enterprises

Why SIEM Evaluation Shapes Migration Success

Choosing the right SIEM isn’t just about comparing features on a datasheet, it’s about proving the platform can handle your organization’s scale, data realities, and security priorities. As we noted in our SIEM Migration blog, evaluation is the critical precursor step. A SIEM migration can only be as successful as the evaluation that guides it.

Many teams struggle here. They test with narrow datasets, rely on vendor-led demos, or overlook integration challenges until late in the process. The result is a SIEM that looks strong in a proof-of-concept but falters in production, leading to costly rework and detection gaps.

To help avoid these traps, we’ve built a practical, CISO-ready SIEM Evaluation Checklist. It’s designed to give you a structured way to validate a SIEM’s fit before you commit, ensuring the platform you choose stands up to real-world demands.

Why SIEM Evaluations Fail and What It Costs You

For most security leaders, evaluating a SIEM feels deceptively straightforward. You run a proof-of-concept, push some data through, and check whether the detections fire. On paper, it looks like due diligence. In practice, it often leaves out the very conditions that determine whether the platform will hold up in production.

Most evaluation missteps trace back to the same few patterns. Understanding them is the first step to avoiding them.

- Limited, non-representative datasets

Testing only with a small or “clean” subset of logs hides ingest quirks, parser failures, and alert noise that show up at scale. - No predefined benchmarks

Without clear targets for detection rates, query latency, or ingest costs, it’s impossible to measure a SIEM fairly or defend the decision later. - Vendor-led demos instead of independent POCs

Demos showcase best-case scenarios and skip the messy realities of live integrations and noisy data — where risks usually hide. - Skipping integration and scalability tests

Breakage often appears when the SIEM connects with SOAR, ticketing, cloud telemetry, or concurrency-heavy queries, but many teams delay testing until migration is already underway.

Flawed evaluation means flawed migration. A weak choice at this stage multiplies complexity, cost, and operational risk down the line.

The SIEM Evaluation Checklist: 10 Must-Have Criteria

SIEM evaluation is one of the most important decisions your security team will make, and the way it’s run has lasting consequences. The goal is to gain enough confidence and clarity that the SIEM you choose can handle production workloads, integrate cleanly with your stack, and deliver measurable value. The checklist below highlights the criteria most CISOs and security leaders rely on when running a disciplined evaluation.

- Define objectives and risk profile

Start by clarifying what success looks like for your organization. Is it faster investigation times, stronger detection coverage, or reducing operating costs? Tie those goals to business and compliance risks so that evaluation criteria stay grounded in outcomes that matter.

- Test with realistic, representative data

Use diverse logs from across your environment, at production scale. Include messy, noisy data and consider synthetic logs to simulate edge cases without exposing sensitive records.

- Check data collection and normalization

Verify that the SIEM can handle logs from your most critical systems without custom development. Focus on parsing accuracy, normalization consistency, and whether enrichment happens automatically or requires heavy engineering effort.

Although, with DataBahn you can automate data parsing and transform data before it hits the SIEM.

- Assess detection and threat hunting

Re-run past incidents and inject test scenarios to confirm whether the SIEM detects them. Evaluate rule logic, correlation accuracy, and the speed of hunting workflows. Pay close attention to false positive and false negative rates.

- Evaluate UEBA capabilities

Many SIEMs now advertise UEBA, but maturity varies widely. Confirm whether behavior models adapt to your environment, surface useful anomalies, and support investigations instead of just creating more dashboards.

- Verify integration and operational fit

Check interoperability with your SOAR, case management, and cloud platforms. Assess how well it aligns with analyst workflows. A SIEM that creates friction for the team will never deliver its full potential.

- Measure scalability and performance

Test sustained ingestion rates and query latency under load. Run short bursts of high-volume data to see how the SIEM performs under pressure. Scalability failures discovered after go-live are among the costliest mistakes.

- Evaluate usability and manageability

Sit your analysts in front of the console and let them run searches, build dashboards, and manage cases. A tool that is intuitive for operators and predictable for administrators is far more likely to succeed in daily use.

- Model costs and total cost of ownership

Go beyond license pricing. Model ingest, storage, query, and scaling costs over time. Factor in engineering overhead and migration complexity. The most attractive quote up front can become the most expensive platform to operate later.

- Review vendor reliability and compliance support

Finally, evaluate the vendor itself. Look at their support model, product roadmap, and ability to meet compliance requirements like PCI DSS, HIPAA, or FedRAMP. A reliable partner matters as much as reliable technology.

Putting the Checklist into Action: POC and Scoring

The checklist gives you a structured way to evaluate a SIEM, but the real insight comes when you apply it in a proof of concept. A strong POC is time-boxed, fed with representative data, and designed to simulate the operational scenarios your SOC faces daily. That includes bringing in realistic log volumes, replaying past incidents, and integrating with existing workflows.

To make the outcomes actionable, score each SIEM against the checklist criteria. A simple weighted scoring model factoring in detection accuracy, integration fit, usability, scalability, and cost, turns the evaluation into measurable results that can be compared across vendors. This way, you move from opinion-driven choices to a clear, defensible decision supported by data.

Evaluating with Clarity, Migrating with Control

A successful SIEM strategy starts with disciplined evaluation. The right platform is only the right choice if it can handle your real-world data, scale with your operations, and deliver consistent detection coverage. That’s why using a structured checklist and a realistic POC isn’t just good practice — it’s essential.

With DataBahn in play, evaluation and migration become simpler. Our platform normalizes and routes telemetry before it ever reaches the SIEM, so you’re not limited by the parsing capacity or schema quirks of a particular tool. Sensitive data can be masked automatically, giving you the freedom to test and compare SIEMs safely without compliance risk.

The result: a stronger evaluation, a cleaner migration path, and a security team that stays firmly in control of its data strategy.

👉 Ready to put this into practice? Download the SIEM Evaluation Checklist for immediate use in your evaluation project.

.png)

Modernizing Legacy Data Infrastructure for the AI Era

For decades, enterprise data infrastructure has been built around systems designed for a slower and more predictable world. CRUD-driven applications, batch ETL processes, and static dashboards shaped how leaders accessed and interpreted information. These systems delivered reports after the fact, relying on humans to query data, build dashboards, analyze results, and take actions.

Hundreds and thousands of enterprise data decisions were based on this paradigm; but it no longer fits the scale or velocity of modern businesses. Global enterprises now run on an ocean of transactions, telemetry, and signals. Leaders expect decisions to be informed, not next quarter, or even next week – but right now. At the same time, AI is setting the bar for what’s possible: contextual reasoning, proactive detection, and natural language interactions with data.