Financial data flows are some of the most complex in any industry. Trades, transactions, positions, valuations, and reference data all pass through ETL jobs, market feeds, and risk engines before surfacing in reports. Multiply that across desks, asset classes, and jurisdictions, and tracing a single figure back to its origin becomes nearly impossible. So when regulators, auditors, or even your own board ask, “Where did this number come from?”, too many teams still don’t have a clear answer. This is why data lineage has become essential in financial services: it gives institutions the ability to show how data moved and transformed across systems.

The stakes couldn’t be higher. Across frameworks like BCBS-239, the Financial Data Transparency Act, and emerging supervisory guidelines in Europe, APAC, and the Middle East, regulators are raising the bar. Banks that have adopted modern data lineage tools report 57% faster audit prep and ~40% gains in engineering productivity, yet progress remains slow — surveys show that fewer than 10% of global banks are fully compliant with BCBS-239 principles. The result is delayed audits, costly manual investigations, and growing skepticism from regulators and stakeholders alike.

The takeaway is simple: data lineage is no longer optional. It has become the foundation for compliance, risk model validation, and trust. Without it, compliance is reactive and fragile; with it, auditability and transparency become operational strengths.

In the rest of this blog, we’ll explore why lineage is so hard to achieve in financial services, what “good” looks like, and how modern approaches are closing the gap.

Why data lineage is so hard to achieve in Financial Services

If lineage were just “draw arrows between systems,” we’d be done. In the real world it fails because of technical edge cases and organizational friction, the stuff that makes tracing a number feel like detective work.

Siloed ownership and messy handoffs

Trade, market, reference and risk systems are often owned by separate teams with different priorities. A single calculation can touch five teams and ten systems; tracing it requires stepping across those boundaries and reconciling different glossaries and operational practices. This isn’t just technical overhead but an ownership problem that breaks automated lineage capture.

Opaque, undocumented transforms in the middle

Lineage commonly breaks inside ETL jobs, bespoke SQL, or one-off spreadsheets. Those transformation steps encode business logic that rarely gets cataloged, and regulators want to know what logic ran, who changed it, and when. That gap is one of the recurring blockers to proving traceability.
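One way to close that gap is to log a small record every time a transformation runs. The sketch below is illustrative only: `TransformRecord` and `record_transform` are hypothetical names, and it assumes the transformation logic is available as text (for example, the SQL string), so a hash can identify exactly which version ran.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class TransformRecord:
    """Hypothetical audit record for one run of a transformation step."""
    step_name: str
    logic_hash: str            # SHA-256 of the exact logic text that ran
    changed_by: str            # who last modified the logic
    ran_at: datetime           # when this run happened (UTC by default)
    inputs: Tuple[str, ...]    # upstream dataset names
    outputs: Tuple[str, ...]   # downstream dataset names


def record_transform(step_name: str, logic_text: str, changed_by: str,
                     inputs: Sequence[str], outputs: Sequence[str],
                     ran_at: Optional[datetime] = None) -> TransformRecord:
    """Build the record; the hash changes whenever the logic text changes."""
    digest = hashlib.sha256(logic_text.encode("utf-8")).hexdigest()
    return TransformRecord(step_name, digest, changed_by,
                           ran_at or datetime.now(timezone.utc),
                           tuple(inputs), tuple(outputs))


# Made-up example: two versions of the same conversion step.
sql_v1 = "SELECT trade_id, notional * fx_rate AS notional_usd FROM trades"
sql_v2 = "SELECT trade_id, notional * fx_rate * 1.0 AS notional_usd FROM trades"
run_1 = record_transform("to_usd", sql_v1, "alice", ["trades"], ["trades_usd"])
run_2 = record_transform("to_usd", sql_v2, "bob", ["trades"], ["trades_usd"])
logic_changed = run_1.logic_hash != run_2.logic_hash
```

Comparing `logic_hash` across runs answers "did the logic change?", while `changed_by` and `ran_at` cover the who and when.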

Temporal and model lineage

Financial reporting and model validation require not just “where did this value come from?” but “what did it look like at time T?” Capturing temporal snapshots and ensuring you can reconstruct the exact input set for a historical run (with schema versions, parameter sets, and market snapshots) adds another layer of complexity most lineage tools don’t handle out of the box.
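The "as of time T" question can be sketched as a versioned lookup. This is a minimal illustration, not a description of any particular tool: `VersionedInput` and `inputs_as_of` are hypothetical names, the dates and rates are made up, and it assumes each input records the date from which a version took effect.

```python
from bisect import bisect_right
from datetime import date
from typing import Any, Dict, List


class VersionedInput:
    """Keeps every version of an input so a historical run can be rebuilt."""

    def __init__(self) -> None:
        self._effective: List[date] = []  # effective dates, kept sorted
        self._values: List[Any] = []      # value that took effect on each date

    def record(self, effective: date, value: Any) -> None:
        i = bisect_right(self._effective, effective)
        self._effective.insert(i, effective)
        self._values.insert(i, value)

    def as_of(self, t: date) -> Any:
        """Return the latest version whose effective date is on or before t."""
        i = bisect_right(self._effective, t)
        if i == 0:
            raise LookupError(f"no version in effect on {t}")
        return self._values[i - 1]


schema_version = VersionedInput()
schema_version.record(date(2024, 1, 1), "v1")
schema_version.record(date(2024, 6, 1), "v2")

usd_eur = VersionedInput()
usd_eur.record(date(2024, 3, 28), 0.9250)
usd_eur.record(date(2024, 3, 29), 0.9265)


def inputs_as_of(t: date) -> Dict[str, Any]:
    """Reconstruct the input set a run on date t would have seen."""
    return {"schema_version": schema_version.as_of(t),
            "usd_eur": usd_eur.as_of(t)}


snapshot = inputs_as_of(date(2024, 3, 28))
```

The same pattern extends to parameter sets and market snapshots; the key design choice is that versions are appended and never overwritten.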

Scaling lineage without runaway costs

Lineage at scale is expensive. Streaming trades, tick data and high-cardinality reference tables generate huge volumes of metadata if you try to capture full, row-level lineage. Teams need to balance fidelity, cost, and queryability, and that trade-off is a frequent operational headache.
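A rough sketch of that trade-off: keep row-level lineage only for the targets that need it, and collapse everything else into per-edge counts. The function name `compact_lineage`, the dataset names, and the event shape are all assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple

# One raw lineage event: (source dataset, target dataset, row id)
Event = Tuple[str, str, str]


def compact_lineage(events: Iterable[Event], row_level_targets: Set[str]):
    """Keep row ids only for targets that need them; count rows for the rest."""
    detailed: List[Event] = []
    edge_counts: Counter = Counter()
    for source, target, row_id in events:
        if target in row_level_targets:
            detailed.append((source, target, row_id))
        else:
            edge_counts[(source, target)] += 1
    return detailed, dict(edge_counts)


events = [
    ("ticks", "intraday_prices", "r1"),
    ("ticks", "intraday_prices", "r2"),
    ("ticks", "intraday_prices", "r3"),
    ("positions", "capital_report", "p1"),
]
detailed, summary = compact_lineage(events, row_level_targets={"capital_report"})
```

Here three tick-level events shrink to a single counted edge, while the regulatory report keeps its row-level trail.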

Organizational friction and change management

Technical fixes only work when governance, process and incentives change too. Lineage rollout touches risk, finance, engineering and compliance. Aligning those stakeholders, enforcing cataloging discipline, and maintaining lineage over time is a people problem as much as a technology one.

The real challenge isn’t drawing arrows between systems but designing lineage that regulators can trust, engineers can maintain, and auditors can use in real time. That’s the standard the industry is now being measured against.

What good Data Lineage looks like in finance

Great lineage in financial services doesn’t look like a prettier diagram; it feels like control. The moment an auditor asks, “Where did this number come from?” the answer should take minutes, not weeks. That’s the benchmark.

It’s continuous, not reactive.

Lineage isn’t something you piece together after an audit request. It’s captured in real time as data flows — across trades, models, and reports — so the evidence is always ready.

It’s explainable to both engineers and auditors.

Engineers should see schema versions, transformations, and dependencies. Auditors should see clear traceability and business definitions. Good lineage bridges both worlds without translation exercises.
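As a rough illustration of serving both audiences from one lineage graph, the sketch below walks upstream from a reported field and renders the result twice: once with technical field names and once with glossary terms. The graph, field names, and glossary entries are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical column-level lineage: each field maps to its direct inputs.
UPSTREAM: Dict[str, List[str]] = {
    "report.var_99": ["risk.pnl_vector"],
    "risk.pnl_vector": ["trades.position", "market.price"],
    "trades.position": [],
    "market.price": [],
}

# Hypothetical business glossary keyed by technical field name.
GLOSSARY: Dict[str, str] = {
    "risk.pnl_vector": "Simulated profit and loss",
    "trades.position": "Open positions",
    "market.price": "Market prices",
}


def trace_upstream(field: str, upstream: Dict[str, List[str]]) -> List[str]:
    """Return every field that feeds `field`, directly or indirectly."""
    seen: Set[str] = set()
    order: List[str] = []
    stack = [field]
    while stack:
        current = stack.pop()
        for parent in upstream.get(current, []):
            if parent not in seen:  # also guards against cycles
                seen.add(parent)
                order.append(parent)
                stack.append(parent)
    return order


engineer_view = trace_upstream("report.var_99", UPSTREAM)
auditor_view = [GLOSSARY.get(name, name) for name in engineer_view]
```

Both views come from the same traversal, so the auditor-facing answer cannot drift from the technical one.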

It scales with the business.

From millions of daily trades to real-time model recalculations, lineage must capture detail without exploding into unusable metadata. That means selective fidelity, efficient storage, and fast queryability built in.

It integrates governance rather than adding it later.

Lineage should carry sensitivity tags, policy markers, and glossary links as data moves. Compliance is strongest when it’s embedded upstream, not enforced after the fact.
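To make "tags travel with the data" concrete, here is a minimal sketch in which every derived column inherits the union of its inputs' sensitivity tags. The function name, column names, and tag values are hypothetical, and the derivation map is assumed to be acyclic.

```python
from typing import Dict, FrozenSet, List


def propagate_tags(source_tags: Dict[str, FrozenSet[str]],
                   derived_from: Dict[str, List[str]]) -> Dict[str, FrozenSet[str]]:
    """Give every derived column the union of its inputs' tags.

    Assumes `derived_from` has no cycles.
    """
    resolved = dict(source_tags)

    def tags_for(column: str) -> FrozenSet[str]:
        if column not in resolved:
            inherited: FrozenSet[str] = frozenset()
            for parent in derived_from.get(column, []):
                inherited |= tags_for(parent)
            resolved[column] = inherited
        return resolved[column]

    for column in derived_from:
        tags_for(column)
    return resolved


source_tags = {
    "customer.ssn": frozenset({"PII"}),
    "trade.notional": frozenset(),
}
derived_from = {
    "kyc.customer_key": ["customer.ssn"],
    "report.exposure": ["kyc.customer_key", "trade.notional"],
}
tags = propagate_tags(source_tags, derived_from)
```

In this example `report.exposure` ends up tagged `PII` even though it is two steps removed from the sensitive source column, which is the behavior an upstream-embedded policy needs.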

The point is simple: effective data lineage makes defensibility the default. It doesn’t slow down data flows or burden teams with extra work. Instead, it builds confidence that every calculation, every report, and every disclosure can be traced and trusted.

Databahn in practice: Data Lineage as part of the flow

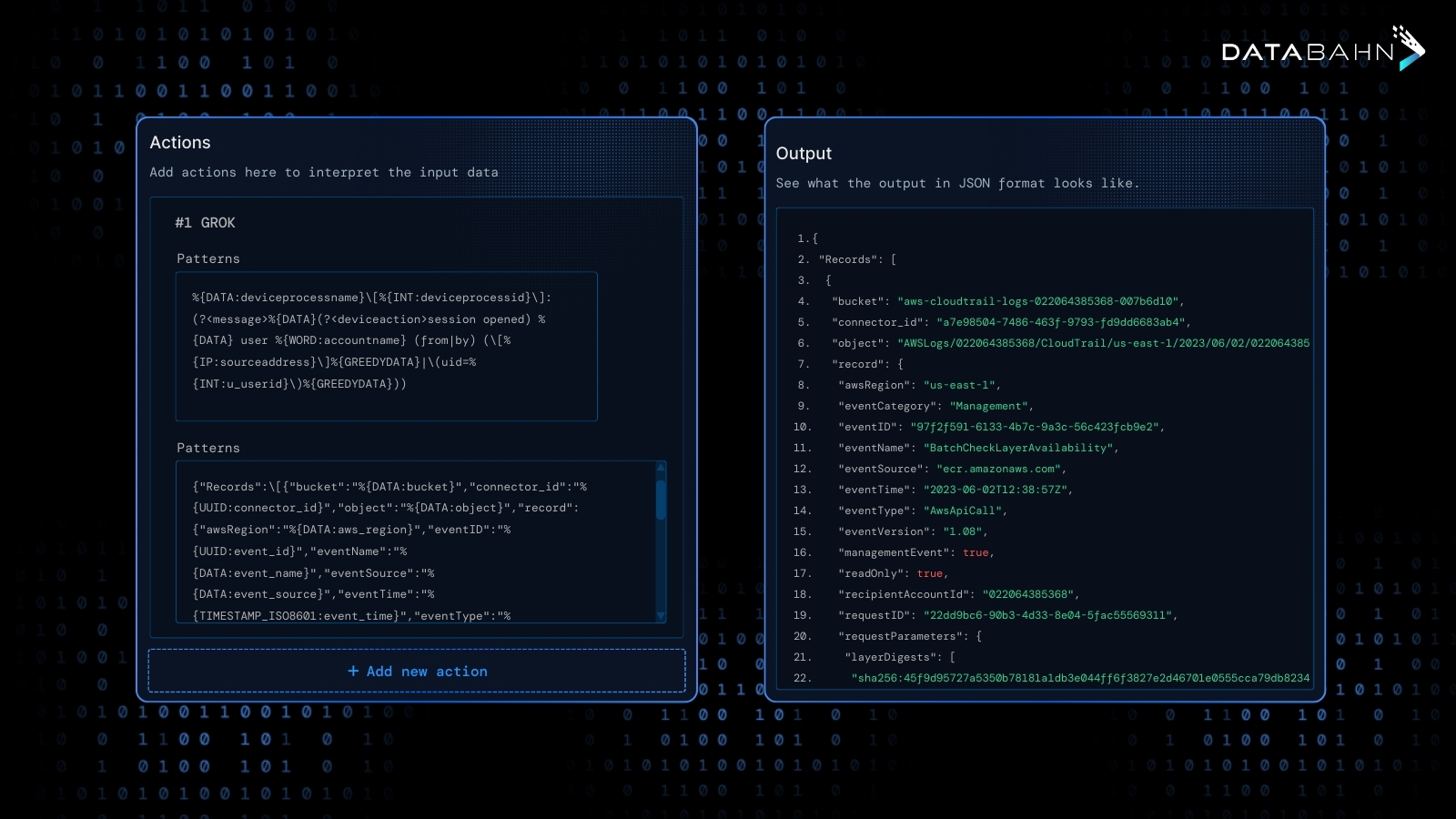

Databahn captures lineage as data moves, not after it lands. Rather than relying on manual cataloging, the platform instruments ingestion, parsing, transformation and routing layers so every change — schema update, join, enrichment or filter — is recorded as part of normal pipeline execution. That means auditors, risk teams and engineers can reconstruct a metric, replay a run, or trace a root cause without digging through ad-hoc scripts or spreadsheets.

In production, that capture is combined with selective fidelity controls, snapshotting for time-travel, and business-friendly lineage views so traceability is both precise for engineers and usable for non-technical stakeholders.

Here are a few of the key features in Databahn’s arsenal and how they enable practical lineage:

- Seamless lineage with Highway – Every routing and transformation is tracked natively, giving a complete view from source to report without blind spots.

- Real-time visibility and health monitoring – Continuous observability across pipelines detects lineage breaks, schema drift, or anomalies as they happen, not months later.

- Governance with history recall and replay – Metadata tagging and audit trails preserve data history so any past report or model run can be reconstructed exactly as it appeared.

- In-flight sensitive data handling – PII and regulated fields can be masked, quarantined, or tagged in motion, with those transformations recorded as part of the audit trail.

- Schema drift detection and normalization – Automatic detection and normalization keep lineage consistent when upstream systems change, preventing gaps that undermine compliance.
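As a generic illustration of what schema drift detection involves (this is not Databahn's implementation or API), the sketch below compares an expected schema with the one observed on arrival. The function name, column names, and type labels are hypothetical.

```python
from typing import Dict, List


def detect_schema_drift(expected: Dict[str, str],
                        observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare column -> type maps and report what changed."""
    added = sorted(observed.keys() - expected.keys())
    removed = sorted(expected.keys() - observed.keys())
    retyped = sorted(col for col in expected.keys() & observed.keys()
                     if expected[col] != observed[col])
    return {"added": added, "removed": removed, "retyped": retyped}


expected = {"trade_id": "string", "notional": "decimal", "ccy": "string"}
observed = {"trade_id": "string", "notional": "float", "currency": "string"}
drift = detect_schema_drift(expected, observed)
```

A rename shows up here as one removed column plus one added column; mapping `ccy` to `currency` is the normalization step that keeps downstream lineage intact.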

The result is lineage that financial institutions can rely on, not just to pass regulatory checks, but to build lasting trust in their reporting and risk models. With Databahn, data lineage becomes a built-in capability, giving institutions confidence that every number can be traced, defended, and trusted.

The future of Data Lineage in finance

Lineage is moving from a compliance checkbox to a living capability. Regulators worldwide are raising expectations, from the Financial Data Transparency Act (FDTA) in the U.S., to ECB/EBA supervisory guidance in Europe, to data risk frameworks in APAC and the Middle East. Across markets, the signal is the same: traceability can’t be partial or reactive; it has to be continuous.

AI is at the center of this shift. Where teams once relied on static diagrams or manual cataloging, AI now powers:

- Automated lineage capture – extracting flows directly from SQL, ETL code, and pipeline metadata.

- Drift and anomaly detection – spotting schema changes or unusual transformations before they become audit findings.

- Metadata enrichment – linking technical fields to business definitions, tagging sensitive data, and surfacing lineage in auditor-friendly terms.

- Proactive remediation – recommending fixes, rerouting flows, or even self-healing pipelines when lineage breaks.
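To show what "extracting flows directly from SQL" has to produce, here is a deliberately naive sketch that pulls table-level edges out of a single `INSERT INTO ... SELECT` statement with regular expressions. It illustrates the output shape only, not how an AI-assisted tool works: it ignores subqueries, CTEs, quoted identifiers, and comments, and a production tool would rely on a proper SQL parser. The function and table names are hypothetical.

```python
import re
from typing import List, Tuple

_TARGET = re.compile(r"\binsert\s+into\s+([\w.]+)", re.IGNORECASE)
_SOURCE = re.compile(r"\b(?:from|join)\s+([\w.]+)", re.IGNORECASE)


def extract_table_lineage(sql: str) -> List[Tuple[str, str]]:
    """Return (source_table, target_table) edges found in one statement."""
    targets = _TARGET.findall(sql)
    sources = sorted(set(_SOURCE.findall(sql)))
    return [(source, target) for target in targets for source in sources]


sql = """
INSERT INTO risk.exposure
SELECT p.trade_id, p.notional * c.weight
FROM trades.positions p
JOIN ref.counterparty c ON p.cpty_id = c.id
"""
edges = extract_table_lineage(sql)
```

For this statement the result is two edges into `risk.exposure`, one from each source table.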

This is also where modern platforms like Databahn are heading. Rather than stop at automation, Databahn applies agentic AI that learns from pipelines, builds context, and acts, whether that’s updating lineage after a schema drift, tagging newly discovered sensitive fields, or ensuring audit trails stay complete.

Looking forward, financial institutions will also see exploration of immutable lineage records (using distributed ledger technologies) and standardized taxonomies to reduce cross-border compliance friction. But the trajectory is already clear: lineage is becoming real-time, AI-assisted, and regulator-ready by default, and platforms with agentic AI at their core are leading that evolution.

Conclusion: Lineage as the Foundation of Trust

Financial institutions can’t afford to treat lineage as a back-office detail. It’s become the foundation of compliance, the enabler of model validation, and the basis of trust in every reported number.

As regulators raise the bar and AI reshapes data management, the institutions that thrive will be the ones that make traceability a built-in capability, not an afterthought. That’s why modern platforms like Databahn are designed with lineage at the core. By capturing data in motion, applying governance upstream, and leveraging agentic AI to keep pipelines audit-ready, they make defensibility the default.

If your institution is asking tougher questions about “where did this number come from?”, now is the time to strengthen your lineage strategy. Explore how Databahn can help make compliance, trust, and auditability a natural outcome of your data pipelines. Get in touch for a demo!
